Swin Transformer Code Walkthrough

Official Swin Transformer code: Swin-transformer

The Swin Transformer model is implemented as follows:

class SwinTransformer(nn.Module):
    r""" Swin Transformer
        A PyTorch impl of : `Swin Transformer: Hierarchical Vision Transformer using Shifted Windows` -
          https://arxiv.org/pdf/2103.14030

    Args:
        img_size (int | tuple(int)): Input image size. Default 224
        patch_size (int | tuple(int)): Patch size. Default: 4
        in_chans (int): Number of input image channels. Default: 3
        num_classes (int): Number of classes for classification head. Default: 1000
        embed_dim (int): Patch embedding dimension. Default: 96
        depths (tuple(int)): Depth of each Swin Transformer layer.
        num_heads (tuple(int)): Number of attention heads in different layers.
        window_size (int): Window size. Default: 7
        mlp_ratio (float): Ratio of mlp hidden dim to embedding dim. Default: 4
        qkv_bias (bool): If True, add a learnable bias to query, key, value. Default: True
        qk_scale (float): Override default qk scale of head_dim ** -0.5 if set. Default: None
        drop_rate (float): Dropout rate. Default: 0
        attn_drop_rate (float): Attention dropout rate. Default: 0
        drop_path_rate (float): Stochastic depth rate. Default: 0.1
        norm_layer (nn.Module): Normalization layer. Default: nn.LayerNorm.
        ape (bool): If True, add absolute position embedding to the patch embedding. Default: False
        patch_norm (bool): If True, add normalization after patch embedding. Default: True
        use_checkpoint (bool): Whether to use checkpointing to save memory. Default: False
        fused_window_process (bool, optional): If True, use one kernel to fused window shift & window partition for acceleration, similar for the reversed part. Default: False
    """

    def __init__(self, img_size=224, patch_size=4, in_chans=3, num_classes=1000,
                 embed_dim=96, depths=[2, 2, 6, 2], num_heads=[3, 6, 12, 24],
                 window_size=7, mlp_ratio=4., qkv_bias=True, qk_scale=None,
                 drop_rate=0., attn_drop_rate=0., drop_path_rate=0.1,
                 norm_layer=nn.LayerNorm, ape=False, patch_norm=True,
                 use_checkpoint=False, fused_window_process=False, **kwargs):
        super().__init__()
        print(f'{img_size=}')

        self.num_classes = num_classes
        self.num_layers = len(depths)
        self.embed_dim = embed_dim
        self.ape = ape
        self.patch_norm = patch_norm
        self.num_features = int(embed_dim * 2 ** (self.num_layers - 1))
        self.mlp_ratio = mlp_ratio

        # split image into non-overlapping patches
        self.patch_embed = PatchEmbed(
            img_size=img_size, patch_size=patch_size, in_chans=in_chans, embed_dim=embed_dim,
            norm_layer=norm_layer if self.patch_norm else None)
        num_patches = self.patch_embed.num_patches
        patches_resolution = self.patch_embed.patches_resolution
        self.patches_resolution = patches_resolution

        # absolute position embedding
        if self.ape:
            self.absolute_pos_embed = nn.Parameter(torch.zeros(1, num_patches, embed_dim))
            trunc_normal_(self.absolute_pos_embed, std=.02)

        self.pos_drop = nn.Dropout(p=drop_rate)

        # stochastic depth
        dpr = [x.item() for x in torch.linspace(0, drop_path_rate, sum(depths))]  # stochastic depth decay rule

        # build layers
        self.layers = nn.ModuleList()
        for i_layer in range(self.num_layers):
            layer = BasicLayer(dim=int(embed_dim * 2 ** i_layer),
                               input_resolution=(patches_resolution[0] // (2 ** i_layer),
                                                 patches_resolution[1] // (2 ** i_layer)),
                               depth=depths[i_layer],
                               num_heads=num_heads[i_layer],
                               window_size=window_size,
                               mlp_ratio=self.mlp_ratio,
                               qkv_bias=qkv_bias, qk_scale=qk_scale,
                               drop=drop_rate, attn_drop=attn_drop_rate,
                               drop_path=dpr[sum(depths[:i_layer]):sum(depths[:i_layer + 1])],
                               norm_layer=norm_layer,
                               downsample=PatchMerging if (i_layer < self.num_layers - 1) else None,
                               use_checkpoint=use_checkpoint,
                               fused_window_process=fused_window_process)
            self.layers.append(layer)

        self.norm = norm_layer(self.num_features)
        self.avgpool = nn.AdaptiveAvgPool1d(1)
        self.head = nn.Linear(self.num_features, num_classes) if num_classes > 0 else nn.Identity()

        self.apply(self._init_weights)

    def _init_weights(self, m):
        if isinstance(m, nn.Linear):
            trunc_normal_(m.weight, std=.02)
            if isinstance(m, nn.Linear) and m.bias is not None:
                nn.init.constant_(m.bias, 0)
        elif isinstance(m, nn.LayerNorm):
            nn.init.constant_(m.bias, 0)
            nn.init.constant_(m.weight, 1.0)

    @torch.jit.ignore
    def no_weight_decay(self):
        return {'absolute_pos_embed'}

    @torch.jit.ignore
    def no_weight_decay_keywords(self):
        return {'relative_position_bias_table'}

    def forward_features(self, x):
        x = self.patch_embed(x)
        if self.ape:
            x = x + self.absolute_pos_embed
        x = self.pos_drop(x)

        for layer in self.layers:
            x = layer(x)

        x = self.norm(x)  # B L C
        x = self.avgpool(x.transpose(1, 2))  # B C 1
        x = torch.flatten(x, 1)
        return x

    def forward(self, x):
        x = self.forward_features(x)
        x = self.head(x)
        return x

    def flops(self):
        flops = 0
        flops += self.patch_embed.flops()
        for i, layer in enumerate(self.layers):
            flops += layer.flops()
        flops += self.num_features * self.patches_resolution[0] * self.patches_resolution[1] // (2 ** self.num_layers)
        flops += self.num_features * self.num_classes
        return flops

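We use image classification as the running example. In that setting, the arguments passed to the constructor are as follows (note that drop_path_rate is 0.2 here, not the default 0.1):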

img_size=224

patch_size=4

in_chans=3

num_classes=1000

embed_dim=96

depths=[2, 2, 6, 2]

num_heads=[3, 6, 12, 24]

window_size=7

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop_rate=0.0

attn_drop_rate=0.0

drop_path_rate=0.2

ape=False

patch_norm=True

use_checkpoint=False

fused_window_process=False

kwargs={}

Step 1: split the whole image into patches and embed them

    # split image into non-overlapping patches
    self.patch_embed = PatchEmbed(
        img_size=img_size, patch_size=patch_size, in_chans=in_chans, embed_dim=embed_dim,
        norm_layer=norm_layer if self.patch_norm else None)
    num_patches = self.patch_embed.num_patches
    patches_resolution = self.patch_embed.patches_resolution
    self.patches_resolution = patches_resolution

class PatchEmbed(nn.Module):
    r""" Image to Patch Embedding

    Args:
        img_size (int): Image size. Default: 224.
        patch_size (int): Patch token size. Default: 4.
        in_chans (int): Number of input image channels. Default: 3.
        embed_dim (int): Number of linear projection output channels. Default: 96.
        norm_layer (nn.Module, optional): Normalization layer. Default: None
    """

    def __init__(self, img_size=224, patch_size=4, in_chans=3, embed_dim=96, norm_layer=None):
        super().__init__()
        img_size = to_2tuple(img_size)
        patch_size = to_2tuple(patch_size)
        patches_resolution = [img_size[0] // patch_size[0], img_size[1] // patch_size[1]]
        self.img_size = img_size
        self.patch_size = patch_size
        self.patches_resolution = patches_resolution
        self.num_patches = patches_resolution[0] * patches_resolution[1]

        self.in_chans = in_chans
        self.embed_dim = embed_dim

        self.proj = nn.Conv2d(in_chans, embed_dim, kernel_size=patch_size, stride=patch_size)
        if norm_layer is not None:
            self.norm = norm_layer(embed_dim)
        else:
            self.norm = None

    def forward(self, x):
        B, C, H, W = x.shape
        # FIXME look at relaxing size constraints
        assert H == self.img_size[0] and W == self.img_size[1], \
            f"Input image size ({H}*{W}) doesn't match model ({self.img_size[0]}*{self.img_size[1]})."
        x = self.proj(x).flatten(2).transpose(1, 2)  # B Ph*Pw C
        if self.norm is not None:
            x = self.norm(x)
        return x

    def flops(self):
        Ho, Wo = self.patches_resolution
        flops = Ho * Wo * self.embed_dim * self.in_chans * (self.patch_size[0] * self.patch_size[1])
        if self.norm is not None:
            flops += Ho * Wo * self.embed_dim
        return flops

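PatchEmbed analysis

PatchEmbed converts an input image into a sequence of patch embeddings. The same idea is used in Vision Transformer (ViT) and related models. The code is analyzed in detail below.

Purpose

Goal: split the input image into small patches and map each patch to a vector in a higher-dimensional space, producing the patch embeddings.

Key steps:

- Split the image: a strided convolution cuts the input image into patches of size patch_size.
- Linear projection: the output channels of the convolution map the pixels of each patch into the embedding space.
- Optional normalization: the embedded feature vectors are normalized if a norm layer is given.

Code details

1. Constructor __init__

    def __init__(self, img_size=224, patch_size=4, in_chans=3, embed_dim=96, norm_layer=None):

Parameters

- img_size: size of the input image; an int is expanded to a square (H, W) pair by to_2tuple. Default: 224.
- patch_size: height and width of each patch. Default: 4.
- in_chans: number of input channels, e.g. 3 for RGB images.
- embed_dim: embedding dimension of each patch after projection.
- norm_layer: optional normalization layer (e.g. nn.LayerNorm) applied to the embeddings.

Implementation details

- Image size and patch resolution:

    img_size = to_2tuple(img_size)
    patch_size = to_2tuple(patch_size)
    patches_resolution = [img_size[0] // patch_size[0], img_size[1] // patch_size[1]]

  - The image size and patch size are converted to tuples, which allows non-square shapes.
  - The number of patches along each axis gives the patch resolution.

- Attribute initialization:

    self.num_patches = patches_resolution[0] * patches_resolution[1]

  - self.num_patches: total number of patches.

- Convolution used as the linear projection:

    self.proj = nn.Conv2d(in_chans, embed_dim, kernel_size=patch_size, stride=patch_size)

  - The convolution splits the image into patches and, at the same time, expands each patch to embed_dim channels.

- Normalization layer (optional):

    if norm_layer is not None:
        self.norm = norm_layer(embed_dim)
    else:
        self.norm = None

2. Forward pass forward

    def forward(self, x):

Input:

- x: input image tensor of shape (B, C, H, W), where B is the batch size, C the number of channels, and H, W the image height and width.

Steps:

- Check the input size:

    assert H == self.img_size[0] and W == self.img_size[1], \
        f"Input image size ({H}*{W}) doesn't match model ({self.img_size[0]}*{self.img_size[1]})."

  - Ensures the input image size matches the img_size the model was built with.

- Convolutional projection:

    x = self.proj(x).flatten(2).transpose(1, 2)  # B, Ph*Pw, C

  - self.proj(x): splits the image into patches and projects each one; the output shape is (B, embed_dim, Ho, Wo), where Ho and Wo are the patch resolution.
  - .flatten(2): flattens the last two dimensions (Ho and Wo) into one; the output shape is (B, embed_dim, num_patches).
  - .transpose(1, 2): swaps the last two dimensions; the output shape is (B, num_patches, embed_dim).

- Normalization (optional):

    if self.norm is not None:
        x = self.norm(x)

Output:

- An embedding tensor of shape (B, num_patches, embed_dim).

3. Computing FLOPs

    def flops(self):

- Purpose: estimate the number of floating-point operations (FLOPs) of this module. The formula counts one multiply-accumulate as one operation.

- Formula:

    flops = Ho * Wo * self.embed_dim * self.in_chans * (self.patch_size[0] * self.patch_size[1])
    if self.norm is not None:
        flops += Ho * Wo * self.embed_dim

  - Convolution FLOPs:
    - Each patch costs embed_dim * in_chans * patch_size[0] * patch_size[1].
    - This is multiplied by the Ho * Wo patches.
  - Normalization FLOPs:
    - If a norm layer is present, each feature vector is normalized once, which is counted as Ho * Wo * embed_dim.

Summary

- Core function:
  - Uses a convolution to split the image and produce the patch embeddings.
- Flexibility:
  - Supports an optional normalization layer.
  - Handles non-square images and patches.
- Efficiency:
  - Provides a flops method for cost analysis.

Pay particular attention to the parameters of the projection convolution, self.proj = nn.Conv2d(in_chans, embed_dim, kernel_size=patch_size, stride=patch_size). Because kernel_size and stride both equal patch_size (4 in this task), the kernel positions do not overlap, so the convolution processes each 4x4 patch independently.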
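To make the numbers concrete, the shape and FLOPs bookkeeping of PatchEmbed can be reproduced with plain arithmetic. The sketch below is only an illustration: the helper name patch_embed_stats is hypothetical, it assumes square images and patches, and it does not run any convolution.

```python
def patch_embed_stats(img_size=224, patch_size=4, in_chans=3, embed_dim=96, with_norm=True):
    """Mirror the shape/FLOPs arithmetic of PatchEmbed (hypothetical helper, square inputs only)."""
    ho = wo = img_size // patch_size          # patches along each axis
    num_patches = ho * wo                     # sequence length after flatten(2)
    # One kernel application per patch and per output channel.
    flops = ho * wo * embed_dim * in_chans * patch_size * patch_size
    if with_norm:
        flops += ho * wo * embed_dim          # cost counted for the norm layer
    return {
        "patches_resolution": (ho, wo),
        "num_patches": num_patches,
        "output_shape": ("B", num_patches, embed_dim),
        "flops": flops,
    }


stats = patch_embed_stats()
print(stats)
```

With the arguments of this walkthrough (224, 4, 3, 96) the patch resolution comes out as (56, 56), which matches the input_resolution passed to the first stage.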

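Step 2: absolute position embedding

    # absolute position embedding
    if self.ape:
        self.absolute_pos_embed = nn.Parameter(torch.zeros(1, num_patches, embed_dim))
        trunc_normal_(self.absolute_pos_embed, std=.02)

This code initializes the absolute position embedding, which adds position information to the patch features so that the model can tell where each patch sits in the image. In the run traced here ape=False, so this branch is skipped. The details are as follows.

Code analysis

1. Condition

    if self.ape:

- self.ape is a boolean that controls whether the absolute position embedding is enabled.
- If ape=True the model uses the absolute position embedding; otherwise this part is skipped.

2. Creating the position embedding parameter

    self.absolute_pos_embed = nn.Parameter(torch.zeros(1, num_patches, embed_dim))

- nn.Parameter:
  - Declares a tensor as a learnable parameter, so it is updated during training.
- Shape (1, num_patches, embed_dim):
  - 1: batch dimension; the same embedding is shared (broadcast) across the batch.
  - num_patches: total number of patches; each patch gets one position embedding.
  - embed_dim: dimension of each position embedding, equal to the patch embedding dimension.
- It is created as an all-zero tensor, so all position embeddings are identical until the next initialization step.

3. Truncated normal initialization

    trunc_normal_(self.absolute_pos_embed, std=.02)

- Purpose:
  - Gives the position embeddings random values, so that they are not all identical at the start.
  - A truncated normal distribution bounds the initial values and avoids extreme values that could destabilize training.
- Parameter:
  - std=.02: standard deviation of 0.02, which controls the spread of the initial values.

What the absolute position embedding does

- Position awareness:
  - Self-attention by itself is order-agnostic (permutation-equivariant): permuting the input tokens simply permutes the outputs.
  - With an absolute position embedding, the position of each patch is encoded in its feature vector, which lets the model distinguish positions.
- How it is applied:
  - The embedding vector for each position is added to the corresponding feature vector, similar to the positional encoding of Transformers in natural language processing (NLP).

Summary

- This code creates a learnable absolute position embedding with nn.Parameter and truncated normal initialization.
- If ape is enabled, the model assigns one position embedding to each patch to help model position information.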

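Step 3: the stochastic depth decay rule

    dpr = [x.item() for x in torch.linspace(0, drop_path_rate, sum(depths))]  # stochastic depth decay rule

This line computes the per-block drop probabilities for stochastic depth (drop path), stored in dpr (drop path rates). Each block gets its own probability of being dropped during training, which regularizes the model and improves generalization.

Code details

    dpr = [x.item() for x in torch.linspace(0, drop_path_rate, sum(depths))]

1. Parameters

- drop_path_rate:
  - The maximum drop probability, reached at the last block.
  - Controls the strength of stochastic depth, typically between 0.0 (disabled) and 0.5.
- depths:
  - A list giving the number of Swin Transformer blocks in each stage.
  - For example, depths = [2, 2, 6, 2] means the model has 4 stages containing 2, 2, 6 and 2 blocks respectively.

2. What torch.linspace does

    torch.linspace(0, drop_path_rate, sum(depths))

- Linear spacing:
  - Creates an evenly spaced sequence from 0 to drop_path_rate with sum(depths) entries.
- One entry per block:
  - sum(depths) is the total number of Transformer blocks in the model.
  - Every block therefore gets its own drop path probability.

3. What x.item() does

- Converts each torch.Tensor element to a Python float.
- The resulting dpr is an ordinary Python list holding the drop path probability of every block.

4. Worked example

Assume:

    drop_path_rate = 0.1
    depths = [2, 2, 6, 2]
    sum(depths) = 2 + 2 + 6 + 2 = 12

Compute:

    torch.linspace(0, 0.1, 12)
    # Output: tensor([0.0000, 0.0091, 0.0182, 0.0273, 0.0364, 0.0455, 0.0545, 0.0636, 0.0727, 0.0818, 0.0909, 0.1000])

As a list:

    dpr = [0.0, 0.0091, 0.0182, 0.0273, 0.0364, 0.0455, 0.0545, 0.0636, 0.0727, 0.0818, 0.0909, 0.1]

Usage: Stochastic Depth

- Stochastic depth is a regularization technique:
  - During training, the residual branch of some blocks is randomly skipped (dropped).
  - This reduces the model's reliance on any single block and makes it more robust to different paths.
- At inference time, all blocks are active.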
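As a rough illustration of what dropping a residual branch means, here is a minimal pure-Python sketch of per-sample drop path. It is an assumption-laden stand-in, not the DropPath module the model actually uses: the function name drop_path, the list-of-lists "batch" and the rng argument are hypothetical. It follows the common convention of rescaling kept samples by 1 / keep_prob so the expected value is unchanged.

```python
import random


def drop_path(batch, drop_prob, training, rng=random):
    """Per-sample stochastic depth on residual-branch outputs (illustrative sketch).

    batch: list of samples, each a list of floats (the branch output for one sample).
    """
    if drop_prob == 0.0 or not training:
        # Inference, or drop path disabled: the branch is always kept.
        return [list(sample) for sample in batch]
    keep_prob = 1.0 - drop_prob
    out = []
    for sample in batch:
        keep = rng.random() < keep_prob            # one decision per sample
        scale = 1.0 / keep_prob if keep else 0.0   # rescale kept samples
        out.append([v * scale for v in sample])
    return out


branch = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(drop_path(branch, drop_prob=0.5, training=True, rng=random.Random(0)))
print(drop_path(branch, drop_prob=0.5, training=False))
```

In a residual block the result would be added to the shortcut, as in x = shortcut + drop_path(branch), so a dropped sample simply passes through unchanged.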

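Assigning drop path probabilities

dpr assigns each block a gradually increasing drop probability:

- The first block has probability 0.0 (never dropped).
- The last block has probability drop_path_rate (the maximum).
- The blocks in between increase linearly.

Summary

This line implements the probability assignment rule of stochastic depth: every block has its own drop probability, and the probability grows with depth, which gives stronger regularization to deeper blocks.

In our task, where drop_path_rate=0.2, the value of dpr is

dpr=[0.0, 0.0181818176060915, 0.036363635212183, 0.05454545468091965, 0.072727270424366, 0.09090908616781235, 0.10909091681241989, 0.12727272510528564, 0.1454545557498932, 0.16363637149333954, 0.1818181872367859, 0.20000000298023224]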
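The decay rule and the per-stage slicing used later when building the stages can be reproduced without PyTorch. The sketch below assumes a hand-written linspace helper (hypothetical, standing in for torch.linspace) and works in double precision, so the values differ from the float32 numbers above in the last digits.

```python
def linspace(start, stop, num):
    """Evenly spaced values from start to stop inclusive (stand-in for torch.linspace)."""
    if num == 1:
        return [float(start)]
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]


depths = [2, 2, 6, 2]
drop_path_rate = 0.2

# One drop probability per block, increasing linearly with depth.
dpr = linspace(0.0, drop_path_rate, sum(depths))

# Same slicing as drop_path=dpr[sum(depths[:i_layer]):sum(depths[:i_layer + 1])].
per_stage = [dpr[sum(depths[:i]):sum(depths[:i + 1])] for i in range(len(depths))]

for i, rates in enumerate(per_stage):
    print(i, [round(r, 4) for r in rates])
```

Each stage receives a contiguous slice of dpr whose length equals its depth, so the third stage, for example, gets six consecutive values.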

Next, the BasicLayer stages are built

    self.layers = nn.ModuleList()
    for i_layer in range(self.num_layers):
        print(f'{i_layer=}')
        layer = BasicLayer(dim=int(embed_dim * 2 ** i_layer),
                           input_resolution=(patches_resolution[0] // (2 ** i_layer),
                                             patches_resolution[1] // (2 ** i_layer)),
                           depth=depths[i_layer],
                           num_heads=num_heads[i_layer],
                           window_size=window_size,
                           mlp_ratio=self.mlp_ratio,
                           qkv_bias=qkv_bias, qk_scale=qk_scale,
                           drop=drop_rate, attn_drop=attn_drop_rate,
                           drop_path=dpr[sum(depths[:i_layer]):sum(depths[:i_layer + 1])],
                           norm_layer=norm_layer,
                           downsample=PatchMerging if (i_layer < self.num_layers - 1) else None,
                           use_checkpoint=use_checkpoint,
                           fused_window_process=fused_window_process)
        self.layers.append(layer)

BasicLayer is implemented as follows:

class BasicLayer(nn.Module):
    """ A basic Swin Transformer layer for one stage.

    Args:
        dim (int): Number of input channels.
        input_resolution (tuple[int]): Input resolution.
        depth (int): Number of blocks.
        num_heads (int): Number of attention heads.
        window_size (int): Local window size.
        mlp_ratio (float): Ratio of mlp hidden dim to embedding dim.
        qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True
        qk_scale (float | None, optional): Override default qk scale of head_dim ** -0.5 if set.
        drop (float, optional): Dropout rate. Default: 0.0
        attn_drop (float, optional): Attention dropout rate. Default: 0.0
        drop_path (float | tuple[float], optional): Stochastic depth rate. Default: 0.0
        norm_layer (nn.Module, optional): Normalization layer. Default: nn.LayerNorm
        downsample (nn.Module | None, optional): Downsample layer at the end of the layer. Default: None
        use_checkpoint (bool): Whether to use checkpointing to save memory. Default: False.
        fused_window_process (bool, optional): If True, use one kernel to fused window shift & window partition for acceleration, similar for the reversed part. Default: False
    """

    def __init__(self, dim, input_resolution, depth, num_heads, window_size,
                 mlp_ratio=4., qkv_bias=True, qk_scale=None, drop=0., attn_drop=0.,
                 drop_path=0., norm_layer=nn.LayerNorm, downsample=None, use_checkpoint=False,
                 fused_window_process=False):
        super().__init__()
        self.dim = dim
        self.input_resolution = input_resolution
        self.depth = depth
        self.use_checkpoint = use_checkpoint

        # build blocks
        self.blocks = nn.ModuleList([
            SwinTransformerBlock(dim=dim, input_resolution=input_resolution,
                                 num_heads=num_heads, window_size=window_size,
                                 shift_size=0 if (i % 2 == 0) else window_size // 2,
                                 mlp_ratio=mlp_ratio,
                                 qkv_bias=qkv_bias, qk_scale=qk_scale,
                                 drop=drop, attn_drop=attn_drop,
                                 drop_path=drop_path[i] if isinstance(drop_path, list) else drop_path,
                                 norm_layer=norm_layer,
                                 fused_window_process=fused_window_process)
            for i in range(depth)])

        # patch merging layer
        if downsample is not None:
            self.downsample = downsample(input_resolution, dim=dim, norm_layer=norm_layer)
        else:
            self.downsample = None

    def forward(self, x):
        for blk in self.blocks:
            if self.use_checkpoint:
                x = checkpoint.checkpoint(blk, x)
            else:
                x = blk(x)
        if self.downsample is not None:
            x = self.downsample(x)
        return x

    def extra_repr(self) -> str:
        return f"dim={self.dim}, input_resolution={self.input_resolution}, depth={self.depth}"

    def flops(self):
        flops = 0
        for blk in self.blocks:
            flops += blk.flops()
        if self.downsample is not None:
            flops += self.downsample.flops()
        return flops

In this example self.num_layers=4. The arguments passed to each BasicLayer are:

i_layer=0

dim=96

input_resolution=(56, 56)

depth=2

num_heads=3

window_size=7

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop=0.0

attn_drop=0.0

drop_path=[0.0, 0.0181818176060915]

norm_layer=<class 'torch.nn.modules.normalization.LayerNorm'>

downsample=<class 'models.swin_transformer.PatchMerging'>

use_checkpoint=False

fused_window_process=False

i_layer=1

dim=192

input_resolution=(28, 28)

depth=2

num_heads=6

window_size=7

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop=0.0

attn_drop=0.0

drop_path=[0.036363635212183, 0.05454545468091965]

norm_layer=<class 'torch.nn.modules.normalization.LayerNorm'>

downsample=<class 'models.swin_transformer.PatchMerging'>

use_checkpoint=False

fused_window_process=False

i_layer=2

dim=384

input_resolution=(14, 14)

depth=6

num_heads=12

window_size=7

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop=0.0

attn_drop=0.0

drop_path=[0.072727270424366, 0.09090908616781235, 0.10909091681241989, 0.12727272510528564, 0.1454545557498932, 0.16363637149333954]

norm_layer=<class 'torch.nn.modules.normalization.LayerNorm'>

downsample=<class 'models.swin_transformer.PatchMerging'>

use_checkpoint=False

fused_window_process=False

i_layer=3

dim=768

input_resolution=(7, 7)

depth=2

num_heads=24

window_size=7

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop=0.0

attn_drop=0.0

drop_path=[0.1818181872367859, 0.20000000298023224]

norm_layer=<class 'torch.nn.modules.normalization.LayerNorm'>

downsample=None

use_checkpoint=False

fused_window_process=False
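The stage-dependent arguments in the dump above follow directly from the expressions in the construction loop. A small sketch, assuming a square input and a hypothetical helper named stage_configs (the downsample entry is reduced to a boolean here, whereas the real code passes the PatchMerging class or None):

```python
def stage_configs(img_size=224, patch_size=4, embed_dim=96,
                  depths=(2, 2, 6, 2), num_heads=(3, 6, 12, 24)):
    """Recompute the per-stage arguments passed to BasicLayer (illustrative only)."""
    res = img_size // patch_size              # patches_resolution along one axis
    num_layers = len(depths)
    configs = []
    for i in range(num_layers):
        configs.append({
            "i_layer": i,
            "dim": int(embed_dim * 2 ** i),                        # channels double per stage
            "input_resolution": (res // (2 ** i), res // (2 ** i)),  # resolution halves per stage
            "depth": depths[i],
            "num_heads": num_heads[i],
            "downsample": i < num_layers - 1,                      # no downsampling after the last stage
        })
    return configs


cfgs = stage_configs()
for cfg in cfgs:
    print(cfg)
```

The pattern is the usual hierarchical one: each stage halves the spatial resolution and doubles the channel count, and only the last stage has no downsampling layer.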

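The implementation of BasicLayer is straightforward.

What the code does

BasicLayer is a basic building block of Swin Transformer that implements one stage of the network. It consists of several Swin Transformer blocks (SwinTransformerBlock) and an optional downsampling module (downsample).

Parameters

- dim: number of channels of the input features.
- input_resolution: resolution of the input feature map, as (H, W).
- depth: number of Swin Transformer blocks in this stage.
- num_heads: number of attention heads.
- window_size: size of the local attention window.
- mlp_ratio: ratio of the MLP hidden dimension to the embedding dimension.
- qkv_bias: whether to add a learnable bias to query, key and value.
- qk_scale: scaling factor for the query-key product; if None, head_dim ** -0.5 is used.
- drop: dropout probability.
- attn_drop: dropout probability inside attention.
- drop_path: stochastic depth rate, either a single value or a list with one value per block.
- norm_layer: normalization layer class, nn.LayerNorm by default.
- downsample: downsampling layer class applied at the end of the stage (PatchMerging here), or None for no downsampling.
- use_checkpoint: whether to use gradient checkpointing to save GPU memory.
- fused_window_process: whether to use a fused kernel for window shift and window partition for acceleration.

Code analysis

1. Constructor

- Store the input arguments

    self.dim = dim
    self.input_resolution = input_resolution
    self.depth = depth
    self.use_checkpoint = use_checkpoint

- Build the Swin Transformer blocks

  - An nn.ModuleList holds the SwinTransformerBlock instances.
  - The shift_size of the blocks alternates between 0 (no shift) and window_size // 2 (shifted windows).
  - drop_path may be a single value or a list; if it is a list, each block gets its own drop path probability.

    self.blocks = nn.ModuleList([
        SwinTransformerBlock(
            dim=dim, input_resolution=input_resolution,
            num_heads=num_heads, window_size=window_size,
            shift_size=0 if (i % 2 == 0) else window_size // 2,
            mlp_ratio=mlp_ratio,
            qkv_bias=qkv_bias, qk_scale=qk_scale,
            drop=drop, attn_drop=attn_drop,
            drop_path=drop_path[i] if isinstance(drop_path, list) else drop_path,
            norm_layer=norm_layer,
            fused_window_process=fused_window_process
        ) for i in range(depth)
    ])

- Build the downsampling layer

  - If downsample is not None, a downsampling module is added at the end of the stage.

    if downsample is not None:
        self.downsample = downsample(input_resolution, dim=dim, norm_layer=norm_layer)
    else:
        self.downsample = None

2. Forward pass

- Run every Swin Transformer block

  - If checkpointing is enabled, torch.utils.checkpoint runs the block without storing its intermediate activations and recomputes them during the backward pass, trading extra compute for lower memory use.

    for blk in self.blocks:
        if self.use_checkpoint:
            x = checkpoint.checkpoint(blk, x)
        else:
            x = blk(x)

- Downsampling

  - If a downsampling module exists, it is applied to the output.

    if self.downsample is not None:
        x = self.downsample(x)

- Return the result

    return x

3. Extras

- extra_repr

  - Adds the module's key parameters to its printed representation, which helps with debugging and inspecting the model structure.

    def extra_repr(self) -> str:
        return f"dim={self.dim}, input_resolution={self.input_resolution}, depth={self.depth}"

- Computing FLOPs

  - Sums the cost (FLOPs) of the stage, including every Swin Transformer block and the downsampling module.

    def flops(self):
        flops = 0
        for blk in self.blocks:
            flops += blk.flops()
        if self.downsample is not None:
            flops += self.downsample.flops()
        return flops

Summary

BasicLayer is an important module of Swin Transformer. It is responsible for:

- Running several Swin Transformer blocks in sequence.
- Alternating regular and shifted window attention through the shift_size given to each block.
- Downsampling at the end of the stage, where configured, to reduce the feature map resolution.

Thanks to this modular design, BasicLayer adapts to the input feature size, depth and downsampling needs of each stage, which fits the hierarchical structure of Swin Transformer.

SwinTransformerBlock inside BasicLayer

SwinTransformerBlock is the core of the model. It is implemented as follows: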

class SwinTransformerBlock(nn.Module):
    r""" Swin Transformer Block.

    Args:
        dim (int): Number of input channels.
        input_resolution (tuple[int]): Input resolution.
        num_heads (int): Number of attention heads.
        window_size (int): Window size.
        shift_size (int): Shift size for SW-MSA.
        mlp_ratio (float): Ratio of mlp hidden dim to embedding dim.
        qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True
        qk_scale (float | None, optional): Override default qk scale of head_dim ** -0.5 if set.
        drop (float, optional): Dropout rate. Default: 0.0
        attn_drop (float, optional): Attention dropout rate. Default: 0.0
        drop_path (float, optional): Stochastic depth rate. Default: 0.0
        act_layer (nn.Module, optional): Activation layer. Default: nn.GELU
        norm_layer (nn.Module, optional): Normalization layer. Default: nn.LayerNorm
        fused_window_process (bool, optional): If True, use one kernel to fused window shift & window partition for acceleration, similar for the reversed part. Default: False
    """

    def __init__(self, dim, input_resolution, num_heads, window_size=7, shift_size=0,
                 mlp_ratio=4., qkv_bias=True, qk_scale=None, drop=0., attn_drop=0., drop_path=0.,
                 act_layer=nn.GELU, norm_layer=nn.LayerNorm,
                 fused_window_process=False):
        super().__init__()
        self.dim = dim
        self.input_resolution = input_resolution
        self.num_heads = num_heads
        self.window_size = window_size
        self.shift_size = shift_size
        self.mlp_ratio = mlp_ratio
        if min(self.input_resolution) <= self.window_size:
            # if window size is larger than input resolution, we don't partition windows
            self.shift_size = 0
            self.window_size = min(self.input_resolution)
        assert 0 <= self.shift_size < self.window_size, "shift_size must in 0-window_size"

        self.norm1 = norm_layer(dim)
        self.attn = WindowAttention(
            dim, window_size=to_2tuple(self.window_size), num_heads=num_heads,
            qkv_bias=qkv_bias, qk_scale=qk_scale, attn_drop=attn_drop, proj_drop=drop)

        self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()
        self.norm2 = norm_layer(dim)
        mlp_hidden_dim = int(dim * mlp_ratio)
        self.mlp = Mlp(in_features=dim, hidden_features=mlp_hidden_dim, act_layer=act_layer, drop=drop)

        if self.shift_size > 0:
            # calculate attention mask for SW-MSA
            H, W = self.input_resolution
            img_mask = torch.zeros((1, H, W, 1))  # 1 H W 1
            h_slices = (slice(0, -self.window_size),
                        slice(-self.window_size, -self.shift_size),
                        slice(-self.shift_size, None))
            w_slices = (slice(0, -self.window_size),
                        slice(-self.window_size, -self.shift_size),
                        slice(-self.shift_size, None))
            cnt = 0
            for h in h_slices:
                for w in w_slices:
                    img_mask[:, h, w, :] = cnt
                    cnt += 1

            mask_windows = window_partition(img_mask, self.window_size)  # nW, window_size, window_size, 1
            mask_windows = mask_windows.view(-1, self.window_size * self.window_size)
            attn_mask = mask_windows.unsqueeze(1) - mask_windows.unsqueeze(2)
            attn_mask = attn_mask.masked_fill(attn_mask != 0, float(-100.0)).masked_fill(attn_mask == 0, float(0.0))
        else:
            attn_mask = None

        self.register_buffer("attn_mask", attn_mask)
        self.fused_window_process = fused_window_process

    def forward(self, x):
        H, W = self.input_resolution
        B, L, C = x.shape
        assert L == H * W, "input feature has wrong size"

        shortcut = x
        x = self.norm1(x)
        x = x.view(B, H, W, C)

        # cyclic shift
        if self.shift_size > 0:
            if not self.fused_window_process:
                shifted_x = torch.roll(x, shifts=(-self.shift_size, -self.shift_size), dims=(1, 2))
                # partition windows
                x_windows = window_partition(shifted_x, self.window_size)  # nW*B, window_size, window_size, C
            else:
                x_windows = WindowProcess.apply(x, B, H, W, C, -self.shift_size, self.window_size)
        else:
            shifted_x = x
            # partition windows
            x_windows = window_partition(shifted_x, self.window_size)  # nW*B, window_size, window_size, C

        x_windows = x_windows.view(-1, self.window_size * self.window_size, C)  # nW*B, window_size*window_size, C

        # W-MSA/SW-MSA
        attn_windows = self.attn(x_windows, mask=self.attn_mask)  # nW*B, window_size*window_size, C

        # merge windows
        attn_windows = attn_windows.view(-1, self.window_size, self.window_size, C)

        # reverse cyclic shift
        if self.shift_size > 0:
            if not self.fused_window_process:
                shifted_x = window_reverse(attn_windows, self.window_size, H, W)  # B H' W' C
                x = torch.roll(shifted_x, shifts=(self.shift_size, self.shift_size), dims=(1, 2))
            else:
                x = WindowProcessReverse.apply(attn_windows, B, H, W, C, self.shift_size, self.window_size)
        else:
            shifted_x = window_reverse(attn_windows, self.window_size, H, W)  # B H' W' C
            x = shifted_x
        x = x.view(B, H * W, C)
        x = shortcut + self.drop_path(x)

        # FFN
        x = x + self.drop_path(self.mlp(self.norm2(x)))

        return x

    def extra_repr(self) -> str:
        return f"dim={self.dim}, input_resolution={self.input_resolution}, num_heads={self.num_heads}, " \
               f"window_size={self.window_size}, shift_size={self.shift_size}, mlp_ratio={self.mlp_ratio}"

    def flops(self):
        flops = 0
        H, W = self.input_resolution
        # norm1
        flops += self.dim * H * W
        # W-MSA/SW-MSA
        nW = H * W / self.window_size / self.window_size
        flops += nW * self.attn.flops(self.window_size * self.window_size)
        # mlp
        flops += 2 * H * W * self.dim * self.dim * self.mlp_ratio
        # norm2
        flops += self.dim * H * W
        return flops

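What the code does

SwinTransformerBlock is one of the core modules of Swin Transformer. It implements window-based multi-head self-attention (Window Multi-Head Self-Attention, W-MSA) and its shifted variant (Shifted Window MSA, SW-MSA). Restricting attention to windows reduces the computation, and the shift introduces information exchange across windows.

Functionality

- Window partition and attention
  - Window partition (window_partition) restricts attention to local windows, which reduces the cost.
  - The shift (shift_size) lets neighbouring windows exchange information.
- Residual connections and feed-forward network
  - The block has two residual connections, one around attention and one around the feed-forward network (FFN).
  - Stochastic depth (DropPath) is applied to improve generalization.
- Masking
  - For SW-MSA an attention mask is needed: after the cyclic shift, a window can contain tokens from regions that were not adjacent in the original feature map, and the mask prevents attention between such tokens.

Code analysis

1. Initialization

- Input arguments

  - Stores the input dimension, resolution, number of heads, window size, shift size and so on.
  - When the input resolution is smaller than or equal to the window size, window_size and shift_size are adjusted: the window covers the whole feature map and no shift is applied.

    if min(self.input_resolution) <= self.window_size:
        self.shift_size = 0
        self.window_size = min(self.input_resolution)
    assert 0 <= self.shift_size < self.window_size, "shift_size must in 0-window_size"

- Components

  - Normalization layers: self.norm1 and self.norm2.
  - Window attention: WindowAttention, which handles both W-MSA and SW-MSA.
  - DropPath: stochastic depth.
  - MLP: the feed-forward network, with hidden dimension dim * mlp_ratio.

    self.norm1 = norm_layer(dim)
    self.attn = WindowAttention(...)
    self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()
    self.norm2 = norm_layer(dim)
    self.mlp = Mlp(...)

- Attention mask

  - When shift_size > 0, an attention mask is computed to restrict attention inside each shifted window.
  - The mask is computed as follows:
    - Create a label map img_mask of shape (1, H, W, 1) and fill each of the 3 x 3 regions defined by h_slices and w_slices with its own id.
    - Partition the label map into windows and flatten each window.
    - Take pairwise differences of the labels inside each window; pairs with different labels get -100.0 and pairs with the same label get 0.0.

    img_mask = torch.zeros((1, H, W, 1))
    h_slices = (slice(0, -self.window_size), ...)
    for h in h_slices:
        for w in w_slices:
            img_mask[:, h, w, :] = cnt
            cnt += 1
    mask_windows = window_partition(img_mask, self.window_size).view(-1, self.window_size * self.window_size)
    attn_mask = mask_windows.unsqueeze(1) - mask_windows.unsqueeze(2)
    attn_mask = attn_mask.masked_fill(attn_mask != 0, float(-100.0)).masked_fill(attn_mask == 0, float(0.0))

2. Forward pass

- Normalization and reshape

  - The input is normalized (norm1) and reshaped into a feature map of shape (B, H, W, C).

    x = self.norm1(x)
    x = x.view(B, H, W, C)

- Shift and window partition

  - If shift_size > 0, the feature map is cyclically shifted (torch.roll).
  - The feature map is partitioned into windows of shape (nW*B, window_size, window_size, C), which are then flattened to (nW*B, window_size*window_size, C).

    shifted_x = torch.roll(x, shifts=(-self.shift_size, -self.shift_size), dims=(1, 2))
    x_windows = window_partition(shifted_x, self.window_size)

- Window attention

  - Multi-head self-attention is computed inside each window; the output shape is (nW*B, window_size*window_size, C).

    attn_windows = self.attn(x_windows, mask=self.attn_mask)

- Window merge and reverse shift

  - The window outputs are merged back into a full feature map and the shift is reversed.

    attn_windows = attn_windows.view(-1, self.window_size, self.window_size, C)
    shifted_x = window_reverse(attn_windows, self.window_size, H, W)
    x = torch.roll(shifted_x, shifts=(self.shift_size, self.shift_size), dims=(1, 2))

- Residual connections and feed-forward network

  - Two residual connections, one around attention and one around the feed-forward network.

    x = shortcut + self.drop_path(x)
    x = x + self.drop_path(self.mlp(self.norm2(x)))

3. Extras

- extra_repr

  - Returns the key parameters of the module, which helps with debugging and inspecting the model.

    def extra_repr(self) -> str:
        return f"dim={self.dim}, input_resolution={self.input_resolution}, num_heads={self.num_heads}, " \
               f"window_size={self.window_size}, shift_size={self.shift_size}, mlp_ratio={self.mlp_ratio}"

- Computing FLOPs

  - Computes the floating-point operations (FLOPs) of the block, covering the normalization layers, attention and the MLP.

    def flops(self):
        flops = 0
        H, W = self.input_resolution
        flops += self.dim * H * W  # norm1
        nW = H * W / self.window_size / self.window_size
        flops += nW * self.attn.flops(self.window_size * self.window_size)  # W-MSA/SW-MSA
        flops += 2 * H * W * self.dim * self.dim * self.mlp_ratio  # MLP
        flops += self.dim * H * W  # norm2
        return flops

Summary

The core functions of SwinTransformerBlock are:

- Computing multi-head self-attention inside local windows (W-MSA).
- Exchanging information across windows through the shift mechanism (SW-MSA).
- Combining residual connections and a feed-forward network to strengthen the features.

This design balances computational cost against the ability to model global information, and it is the basis of the efficient hierarchical attention in Swin Transformer.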
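The SW-MSA attention mask is easier to follow on a tiny feature map. The sketch below rebuilds the same labelling and pairwise comparison with nested Python lists instead of tensors. It is an illustrative stand-in: the function name build_attn_mask is hypothetical, and the window partition is written out by hand rather than calling the repository's window_partition.

```python
def build_attn_mask(H, W, window_size, shift_size):
    """Pure-Python version of the SW-MSA mask construction (illustrative sketch)."""
    # Label each position with the id of the region it falls into.
    img_mask = [[0] * W for _ in range(H)]
    slices = (slice(0, -window_size),
              slice(-window_size, -shift_size),
              slice(-shift_size, None))
    cnt = 0
    for hs in slices:
        for ws in slices:
            for r in range(H)[hs]:
                for c in range(W)[ws]:
                    img_mask[r][c] = cnt
            cnt += 1

    # Partition the label map into non-overlapping windows and flatten each one.
    windows = []
    for r0 in range(0, H, window_size):
        for c0 in range(0, W, window_size):
            windows.append([img_mask[r][c]
                            for r in range(r0, r0 + window_size)
                            for c in range(c0, c0 + window_size)])

    # Token pairs with different labels get -100, pairs with the same label get 0.
    masks = [[[0.0 if a == b else -100.0 for b in win] for a in win] for win in windows]
    return img_mask, masks


img_mask, masks = build_attn_mask(4, 4, window_size=2, shift_size=1)
for row in img_mask:
    print(row)
print(masks[1])
```

For this 4x4 example the first window contains a single region, so its mask is all zeros, while the last window mixes four regions and only lets each token attend to itself. The value -100 is added to the attention logits, which makes the masked pairs negligible after softmax.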

The arguments passed to each SwinTransformerBlock, block by block, are:

dim=96

input_resolution=(56, 56)

num_heads=3

window_size=7

shift_size=0

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop=0.0

attn_drop=0.0

drop_path=0.0

fused_window_process=False

act_layer=<class 'torch.nn.modules.activation.GELU'>

norm_layer=<class 'torch.nn.modules.normalization.LayerNorm'>

dim=96

input_resolution=(56, 56)

num_heads=3

window_size=7

shift_size=3

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop=0.0

attn_drop=0.0

drop_path=0.0181818176060915

fused_window_process=False

act_layer=<class 'torch.nn.modules.activation.GELU'>

norm_layer=<class 'torch.nn.modules.normalization.LayerNorm'>

dim=192

input_resolution=(28, 28)

num_heads=6

window_size=7

shift_size=0

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop=0.0

attn_drop=0.0

drop_path=0.036363635212183

fused_window_process=False

act_layer=<class 'torch.nn.modules.activation.GELU'>

norm_layer=<class 'torch.nn.modules.normalization.LayerNorm'>

dim=192

input_resolution=(28, 28)

num_heads=6

window_size=7

shift_size=3

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop=0.0

attn_drop=0.0

drop_path=0.05454545468091965

fused_window_process=False

act_layer=<class 'torch.nn.modules.activation.GELU'>

norm_layer=<class 'torch.nn.modules.normalization.LayerNorm'>

dim=384

input_resolution=(14, 14)

num_heads=12

window_size=7

shift_size=0

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop=0.0

attn_drop=0.0

drop_path=0.072727270424366

fused_window_process=False

act_layer=<class 'torch.nn.modules.activation.GELU'>

norm_layer=<class 'torch.nn.modules.normalization.LayerNorm'>

dim=384

input_resolution=(14, 14)

num_heads=12

window_size=7

shift_size=3

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop=0.0

attn_drop=0.0

drop_path=0.09090908616781235

fused_window_process=False

act_layer=<class 'torch.nn.modules.activation.GELU'>

norm_layer=<class 'torch.nn.modules.normalization.LayerNorm'>

dim=384

input_resolution=(14, 14)

num_heads=12

window_size=7

shift_size=0

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop=0.0

attn_drop=0.0

drop_path=0.10909091681241989

fused_window_process=False

act_layer=<class 'torch.nn.modules.activation.GELU'>

norm_layer=<class 'torch.nn.modules.normalization.LayerNorm'>

dim=384

input_resolution=(14, 14)

num_heads=12

window_size=7

shift_size=3

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop=0.0

attn_drop=0.0

drop_path=0.12727272510528564

fused_window_process=False

act_layer=<class 'torch.nn.modules.activation.GELU'>

norm_layer=<class 'torch.nn.modules.normalization.LayerNorm'>

dim=384

input_resolution=(14, 14)

num_heads=12

window_size=7

shift_size=0

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop=0.0

attn_drop=0.0

drop_path=0.1454545557498932

fused_window_process=False

act_layer=<class 'torch.nn.modules.activation.GELU'>

norm_layer=<class 'torch.nn.modules.normalization.LayerNorm'>

dim=384

input_resolution=(14, 14)

num_heads=12

window_size=7

shift_size=3

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop=0.0

attn_drop=0.0

drop_path=0.16363637149333954

fused_window_process=False

act_layer=<class 'torch.nn.modules.activation.GELU'>

norm_layer=<class 'torch.nn.modules.normalization.LayerNorm'>

dim=768

input_resolution=(7, 7)

num_heads=24

window_size=7

shift_size=0

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop=0.0

attn_drop=0.0

drop_path=0.1818181872367859

fused_window_process=False

act_layer=<class 'torch.nn.modules.activation.GELU'>

norm_layer=<class 'torch.nn.modules.normalization.LayerNorm'>

dim=768

input_resolution=(7, 7)

num_heads=24

window_size=7

shift_size=3

mlp_ratio=4.0

qkv_bias=True

qk_scale=None

drop=0.0

attn_drop=0.0

drop_path=0.20000000298023224

fused_window_process=False

act_layer=<class 'torch.nn.modules.activation.GELU'>

norm_layer=<class 'torch.nn.modules.normalization.LayerNorm'>

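Swin Transformer relies on a shifted-window scheme, so the value to watch here is shift_size. Across the blocks listed above it alternates between 0 and 3 (3 is window_size // 2 for window_size=7).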
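The pattern in the dump can be reproduced with a few lines of plain Python. This is a minimal sketch under two assumptions: that shift_size is 0 for even-indexed blocks within a stage and window_size // 2 for odd-indexed ones, and that drop_path rises linearly from 0 to 0.2 over all 12 blocks (0.2 is what the last value in the dump suggests). The helper name `block_schedule` is hypothetical and is not part of the Swin Transformer repository.

```python
def block_schedule(depths, window_size, drop_path_rate):
    """Return (stage, shift_size, drop_path) for every block, in order.

    Hypothetical helper that mirrors the pattern visible in the dump;
    it is not a function from the Swin Transformer repository.
    """
    total = sum(depths)
    schedule = []
    block_id = 0
    for stage, depth in enumerate(depths):
        for i in range(depth):
            # Even-indexed blocks use regular windows, odd-indexed ones shift.
            shift_size = 0 if i % 2 == 0 else window_size // 2
            # Linear ramp of the stochastic depth rate over all blocks.
            drop_path = drop_path_rate * block_id / max(total - 1, 1)
            schedule.append((stage, shift_size, drop_path))
            block_id += 1
    return schedule


schedule = block_schedule(depths=[2, 2, 6, 2], window_size=7, drop_path_rate=0.2)
for stage, shift_size, drop_path in schedule:
    print(f"stage={stage} shift_size={shift_size} drop_path={drop_path:.4f}")
```

The printed drop_path values agree with the dump up to float32 rounding.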

WindowAttention in SwinTransformerBlock

class WindowAttention(nn.Module):
    r""" Window based multi-head self attention (W-MSA) module with relative position bias.
    It supports both of shifted and non-shifted window.

    Args:
        dim (int): Number of input channels.
        window_size (tuple[int]): The height and width of the window.
        num_heads (int): Number of attention heads.
        qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True
        qk_scale (float | None, optional): Override default qk scale of head_dim ** -0.5 if set
        attn_drop (float, optional): Dropout ratio of attention weight. Default: 0.0
        proj_drop (float, optional): Dropout ratio of output. Default: 0.0
    """

    def __init__(self, dim, window_size, num_heads, qkv_bias=True, qk_scale=None, attn_drop=0., proj_drop=0.):
        super().__init__()
        self.dim = dim
        self.window_size = window_size  # Wh, Ww
        self.num_heads = num_heads
        head_dim = dim // num_heads
        self.scale = qk_scale or head_dim ** -0.5

        # define a parameter table of relative position bias
        self.relative_position_bias_table = nn.Parameter(
            torch.zeros((2 * window_size[0] - 1) * (2 * window_size[1] - 1), num_heads))  # 2*Wh-1 * 2*Ww-1, nH

        # get pair-wise relative position index for each token inside the window
        coords_h = torch.arange(self.window_size[0])
        coords_w = torch.arange(self.window_size[1])
        coords = torch.stack(torch.meshgrid([coords_h, coords_w]))  # 2, Wh, Ww
        coords_flatten = torch.flatten(coords, 1)  # 2, Wh*Ww
        relative_coords = coords_flatten[:, :, None] - coords_flatten[:, None, :]  # 2, Wh*Ww, Wh*Ww
        relative_coords = relative_coords.permute(1, 2, 0).contiguous()  # Wh*Ww, Wh*Ww, 2
        relative_coords[:, :, 0] += self.window_size[0] - 1  # shift to start from 0
        relative_coords[:, :, 1] += self.window_size[1] - 1
        relative_coords[:, :, 0] *= 2 * self.window_size[1] - 1
        relative_position_index = relative_coords.sum(-1)  # Wh*Ww, Wh*Ww
        self.register_buffer("relative_position_index", relative_position_index)

        self.qkv = nn.Linear(dim, dim * 3, bias=qkv_bias)
        self.attn_drop = nn.Dropout(attn_drop)
        self.proj = nn.Linear(dim, dim)
        self.proj_drop = nn.Dropout(proj_drop)

        trunc_normal_(self.relative_position_bias_table, std=.02)
        self.softmax = nn.Softmax(dim=-1)

    def forward(self, x, mask=None):
        """
        Args:
            x: input features with shape of (num_windows*B, N, C)
            mask: (0/-inf) mask with shape of (num_windows, Wh*Ww, Wh*Ww) or None
        """
        B_, N, C = x.shape
        qkv = self.qkv(x).reshape(B_, N, 3, self.num_heads, C // self.num_heads).permute(2, 0, 3, 1, 4)
        q, k, v = qkv[0], qkv[1], qkv[2]  # make torchscript happy (cannot use tensor as tuple)

        q = q * self.scale
        attn = (q @ k.transpose(-2, -1))

        relative_position_bias = self.relative_position_bias_table[self.relative_position_index.view(-1)].view(
            self.window_size[0] * self.window_size[1], self.window_size[0] * self.window_size[1], -1)  # Wh*Ww,Wh*Ww,nH
        relative_position_bias = relative_position_bias.permute(2, 0, 1).contiguous()  # nH, Wh*Ww, Wh*Ww
        attn = attn + relative_position_bias.unsqueeze(0)

        if mask is not None:
            nW = mask.shape[0]
            attn = attn.view(B_ // nW, nW, self.num_heads, N, N) + mask.unsqueeze(1).unsqueeze(0)
            attn = attn.view(-1, self.num_heads, N, N)
            attn = self.softmax(attn)
        else:
            attn = self.softmax(attn)

        attn = self.attn_drop(attn)

        x = (attn @ v).transpose(1, 2).reshape(B_, N, C)
        x = self.proj(x)
        x = self.proj_drop(x)
        return x

    def extra_repr(self) -> str:
        return f'dim={self.dim}, window_size={self.window_size}, num_heads={self.num_heads}'

    def flops(self, N):
        # calculate flops for 1 window with token length of N
        flops = 0
        # qkv = self.qkv(x)
        flops += N * self.dim * 3 * self.dim
        # attn = (q @ k.transpose(-2, -1))
        flops += self.num_heads * N * (self.dim // self.num_heads) * N
        # x = (attn @ v)
        flops += self.num_heads * N * N * (self.dim // self.num_heads)
        # x = self.proj(x)
        flops += N * self.dim * self.dim
        return flops

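This code implements a window-based multi-head self-attention (W-MSA) module. It computes local self-attention over image or sequence data and is widely used in vision tasks (for example, in Swin Transformer). A detailed walkthrough follows.

1. Class definition and parameters

Class name: WindowAttention

Main purpose:

Implements window-based multi-head self-attention, with a relative position bias and support for shifted windows.

Constructor parameters:

- dim: number of input channels.

- window_size: size of the window, a 2-tuple (height, width).

- num_heads: number of attention heads.

- qkv_bias: whether the linear projection for the query (Q), key (K), and value (V) uses a bias.

- qk_scale: optional override for the scale applied to the query-key product; the default is head_dim ** -0.5.

- attn_drop: dropout ratio applied to the attention weights.

- proj_drop: dropout ratio applied to the output.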

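2. Initialization (__init__)

1) Main submodules

- self.qkv: linear layer that produces the queries, keys, and values in one pass; its output dimension is \(3 \times \text{dim}\).

- self.proj: linear layer applied to the attention output.

- self.softmax: softmax that normalizes the attention weights.

2) Relative position bias

- relative_position_bias_table: stores the learnable relative position biases, with shape ((2*window_size[0]-1)*(2*window_size[1]-1), num_heads). The table size depends on the window height and width, because the relative offset along each axis can take 2*size-1 distinct values.

- relative_position_index: the relative position index of every pair of tokens in the window, used to look up values in the bias table.

3) Initialization and registration

- trunc_normal_: initializes the bias table from a truncated normal distribution with standard deviation 0.02.

- self.register_buffer: stores the relative position index as a buffer, so it receives no gradient updates but still takes part in the computation.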

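3. Forward pass (forward)

Inputs:

- x: input features with shape \((\text{num\_windows} \times B, N, C)\), where \(B\) is the batch size, \(N\) is the number of tokens per window (normally window_size[0] * window_size[1]), and \(C\) is the feature dimension.

- mask: optional mask used to handle shifted windows.

Steps:

- Linear projection to Q, K, V: self.qkv projects the input x, and the result is reshaped and split into the queries (Q), keys (K), and values (V). Each of q, k, v has shape (B_, num_heads, N, C // num_heads).

- Attention scores: q is first multiplied by self.scale, then \(\text{attn} = q \cdot k^{T}\), with shape (B_, num_heads, N, N). The relative_position_bias is then added to the scores.

- Masking (optional): if mask is provided, it is added to the attention scores to account for the window shift.

- Normalization and dropout: softmax normalizes the attention scores, and attn_drop applies dropout to them.

- Attention output: \(x = \text{attn} \cdot v\), reshaped back to (B_, N, C), then passed through the linear projection self.proj and the dropout self.proj_drop to give the final output.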
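The order of operations in the score computation (add bias, add mask, then softmax) can be illustrated for a single query row in plain Python. This is a minimal sketch with made-up numbers: the scores, bias, and mask values are assumptions chosen for illustration, and -100.0 stands in for the large negative value that a shifted-window mask places on positions that must not be attended to.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


# One query attending to three keys (illustrative values only).
scores = [1.0, 2.0, 0.5]     # scaled q @ k^T for this query
bias = [0.1, 0.0, -0.1]      # relative position bias for each key
mask = [0.0, -100.0, 0.0]    # key 1 is masked out for this query

# Bias and mask are both added to the raw scores before the softmax.
logits = [s + b + m for s, b, m in zip(scores, bias, mask)]
weights = softmax(logits)
print(weights)
```

Although key 1 has the highest raw score, its weight after the softmax is effectively zero, and the remaining weight is shared by keys 0 and 2.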

4. Computing FLOPs

The flops method counts the floating-point operations for a single window of \(N\) tokens:

- qkv: \(N \times \text{dim} \times 3 \times \text{dim}\).

- attn (q @ k^T): \(\text{num\_heads} \times N \times (\text{dim}/\text{num\_heads}) \times N\).

- attn @ v: \(\text{num\_heads} \times N \times N \times (\text{dim}/\text{num\_heads})\).

- proj: \(N \times \text{dim} \times \text{dim}\).
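To make the four terms concrete, they can be evaluated for the first-stage configuration in the dump (dim=96, num_heads=3, a 7×7 window, so N=49). This is a minimal sketch that repeats the arithmetic of the flops method; the function name `window_attention_flops` is hypothetical.

```python
def window_attention_flops(dim, num_heads, n_tokens):
    """Evaluate the same four terms as WindowAttention.flops for one window."""
    head_dim = dim // num_heads
    qkv = n_tokens * dim * 3 * dim                        # self.qkv(x)
    attn = num_heads * n_tokens * head_dim * n_tokens     # q @ k^T
    attn_v = num_heads * n_tokens * n_tokens * head_dim   # attn @ v
    proj = n_tokens * dim * dim                           # self.proj(x)
    return {
        "qkv": qkv,
        "attn": attn,
        "attn_v": attn_v,
        "proj": proj,
        "total": qkv + attn + attn_v + proj,
    }


flops = window_attention_flops(dim=96, num_heads=3, n_tokens=7 * 7)
for name, value in flops.items():
    print(f"{name}: {value:,}")
```

For this configuration the two linear layers (qkv and proj) account for most of the total, because N=49 is smaller than dim=96.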

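5. Summary

Key features:

- Windowing: restricts the computation to local windows, which reduces computational complexity.

- Relative position bias: makes the model more sensitive to positional variation within a local region.

- Multi-head attention: several attention heads capture different features.

Use cases: typically used in vision Transformers (such as Swin Transformer); suited to images and other grid-structured data.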

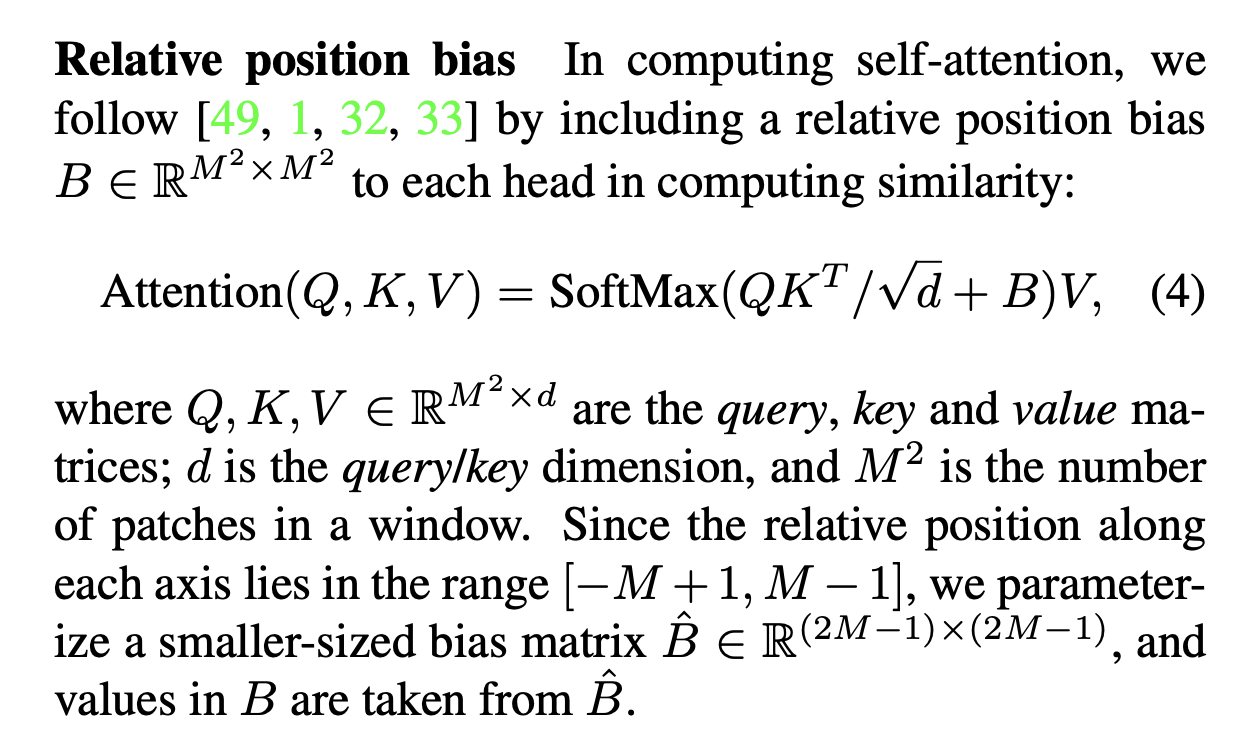

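The relative position bias is introduced here mainly to improve the model's awareness of position, especially inside a local window, so that it can better capture the relationships and structure among pixels in a local region. Its motivation and effect are described below.

1. Limitations of absolute position encoding

A conventional Transformer uses absolute position encoding: a fixed vector (such as a sinusoidal encoding) is added at every position to supply position information. However:

- Nature of local windows: in window-based self-attention, each window contains only a local subset of the tokens. Relative positions inside the window matter more than absolute positions, because the window origin can change (for example, under the window shift).

- Insensitivity to displacement: when the scene is translated or the token order inside a window changes, absolute position encoding does not capture the resulting change in relative relationships.

2. Advantages of the relative position bias

The relative position bias addresses these problems and has the following advantages:

1) Capturing local relationships

- Relative positions describe local relationships: the bias is based on the relative offset between tokens (for example, how far left, right, up, or down one token is from another).

- Sensitivity to local patterns: it captures structural patterns inside the window, such as textures and edges in an image, more effectively.

2) Robustness to translation

- Adapting to window movement: the bias depends only on the relative offset between tokens, not on the absolute position of the window. When the windows are shifted, the relative positions stay the same, so the model behaves more consistently.

3) Computational efficiency

- Fewer position parameters: the biases are stored in a single table of shape \(((2 \times \text{Wh} - 1) \times (2 \times \text{Ww} - 1), \text{nH})\), which depends only on the window size and the number of attention heads, so no separate position information has to be maintained for each token.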

3. Implementation in the code

self.relative_position_bias_table = nn.Parameter(

torch.zeros((2 * window_size[0] - 1) * (2 * window_size[1] - 1), num_heads)

)

- Shape:

- For a window of size \(\text{Wh} \times \text{Ww}\), the relative position of any two tokens in the window lies in the following ranges:

- Height: \([-(\text{Wh} - 1), +(\text{Wh} - 1)]\).

- Width: \([-(\text{Ww} - 1), +(\text{Ww} - 1)]\).

- The total number of relative positions is therefore \((2 \times \text{Wh} - 1) \times (2 \times \text{Ww} - 1)\).

- The bias table is a 2-D matrix that stores the bias for every relative position; its shape is (number of relative positions, num_heads).

- Usage:

- When the attention scores are computed, the biases are looked up in the table and added to the attention matrix:

relative_position_bias = self.relative_position_bias_table[self.relative_position_index.view(-1)].view(...)

attn = attn + relative_position_bias.unsqueeze(0)
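The mapping from a pair of tokens to a row of the bias table can be checked by hand on a small window. This is a minimal pure-Python sketch of the same arithmetic as the index construction in __init__ (offset each axis so it starts at 0, then flatten the two offsets into one index); the function name `relative_position_index` is reused here only for illustration, and a 2×2 window is assumed for the small case.

```python
def relative_position_index(wh, ww):
    """Pure-Python version of the index construction for a (wh, ww) window."""
    coords = [(h, w) for h in range(wh) for w in range(ww)]  # row-major tokens
    index = []
    for hi, wi in coords:
        row = []
        for hj, wj in coords:
            dh = hi - hj + (wh - 1)  # shift to start from 0
            dw = wi - wj + (ww - 1)
            row.append(dh * (2 * ww - 1) + dw)  # flatten (dh, dw) to one index
        index.append(row)
    return index


small = relative_position_index(2, 2)
for row in small:
    print(row)

full = relative_position_index(7, 7)
distinct = {v for row in full for v in row}
print(len(full), len(full[0]), min(distinct), max(distinct), len(distinct))
```

For the 2×2 window the result is a 4×4 matrix with entries from 0 to 8, one for each of the 3×3 possible offsets. For the 7×7 window the matrix is 49×49 and uses all 169 rows of the table, with indices 0 through 168.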

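4. Summary

The relative position bias gives self-attention inside local windows effective position information. In particular, it:

- Strengthens local awareness, capturing structural patterns inside the window more effectively.

- Is robust to translation and to window shifts, which makes it more adaptable.

- Is computationally efficient, since a table lookup replaces more complex explicit encoding.

This design is important in vision tasks (as in Swin Transformer), because local image features are essential for pattern recognition.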

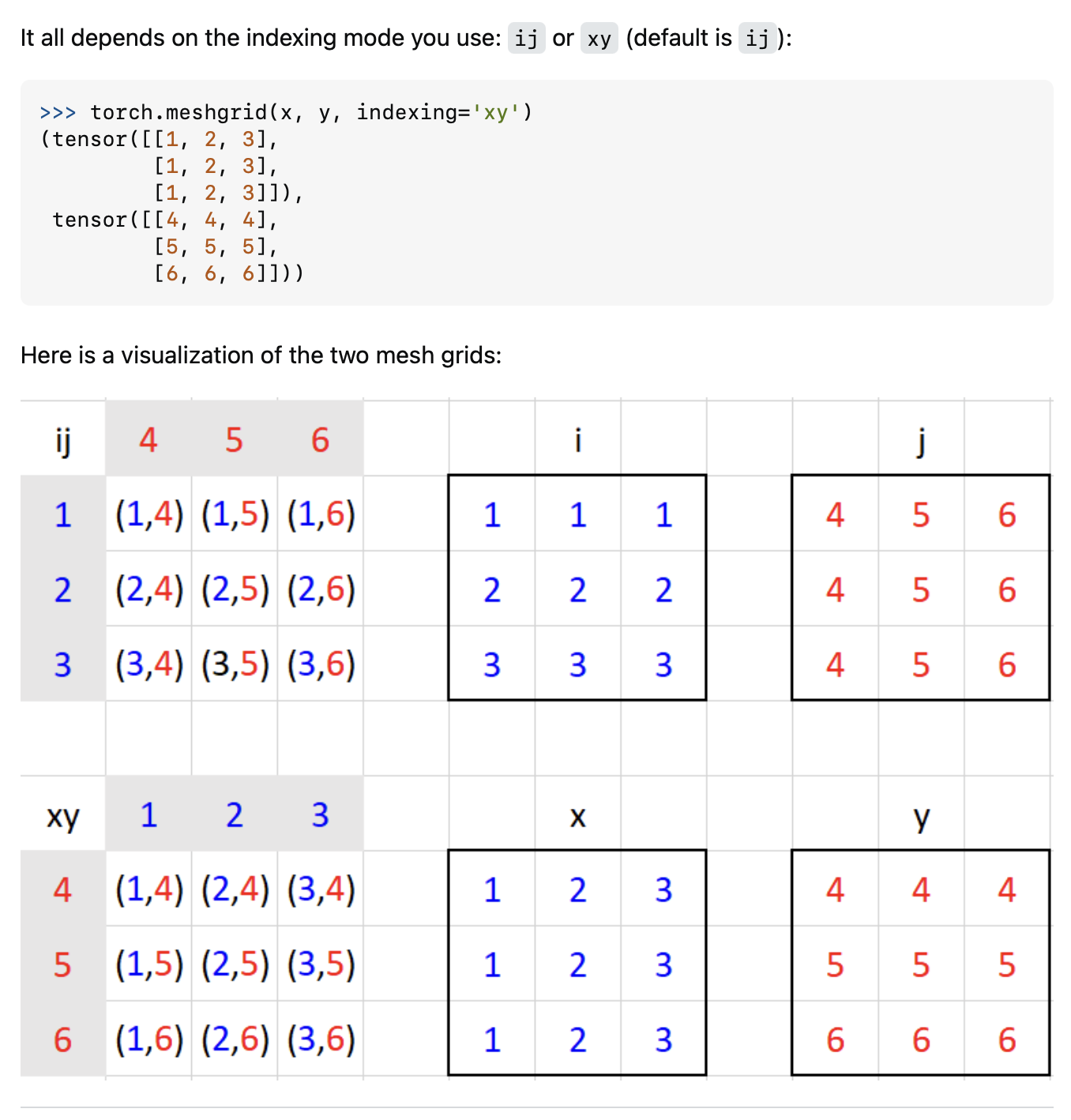

A quick note on torch.meshgrid.

Consider:

x = torch.tensor([1, 2, 3])

y = torch.tensor([4, 5, 6])

torch.meshgrid(x, y)
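With the default 'ij' indexing, this call returns two 3×3 grids: the first repeats x down the rows and the second repeats y across the columns. The values can be reproduced without PyTorch; the following is a minimal pure-Python emulation, where the helper `meshgrid_ij` is hypothetical and handles only two 1-D lists.

```python
def meshgrid_ij(xs, ys):
    """Emulate torch.meshgrid(x, y) with 'ij' indexing for two 1-D lists."""
    grid_x = [[x for _ in ys] for x in xs]  # each row holds one value of x
    grid_y = [[y for y in ys] for _ in xs]  # every row is a copy of ys
    return grid_x, grid_y


grid_x, grid_y = meshgrid_ij([1, 2, 3], [4, 5, 6])
print(grid_x)  # [[1, 1, 1], [2, 2, 2], [3, 3, 3]]
print(grid_y)  # [[4, 5, 6], [4, 5, 6], [4, 5, 6]]
```

Reading the two grids at the same (i, j) gives the coordinate pair (x[i], y[j]), which is how WindowAttention obtains the (row, column) coordinate of every token in a window.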

Relative position bias in WindowAttention

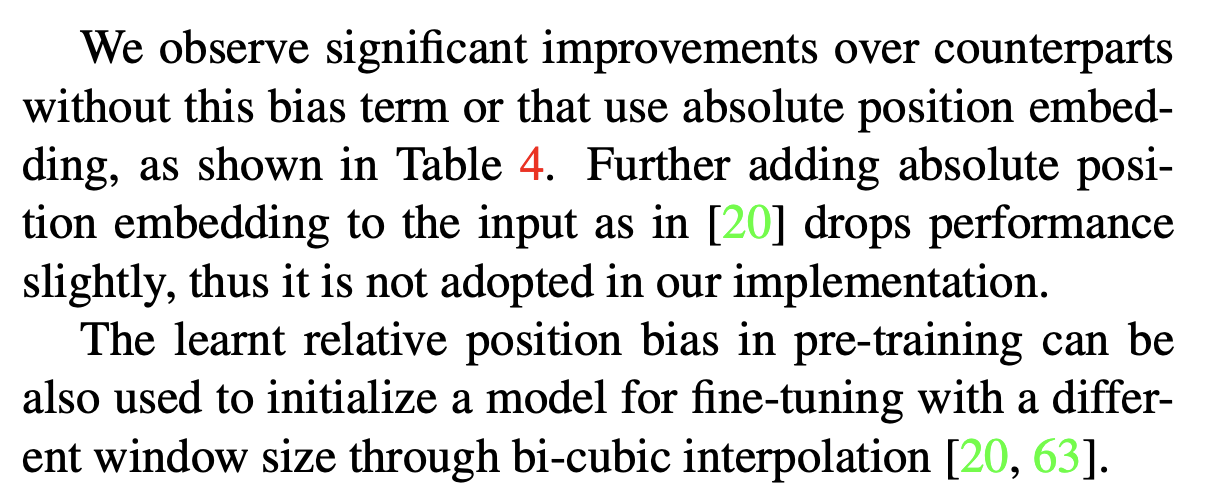

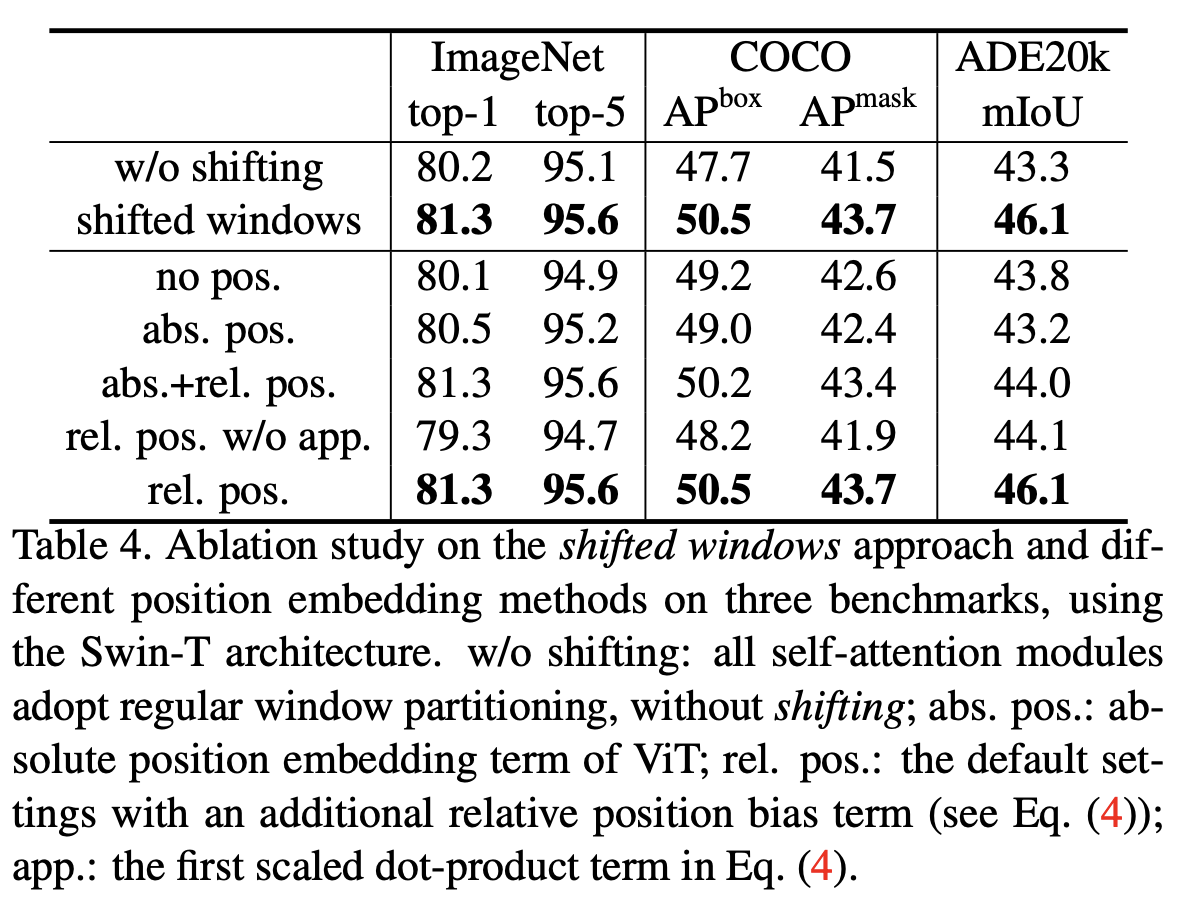

According to the original Swin Transformer paper, the relative position bias effectively improves the network's results.

In WindowAttention, the corresponding code is:

# get pair-wise relative position index for each token inside the window

coords_h = torch.arange(self.window_size[0])

coords_w = torch.arange(self.window_size[1])

coords = torch.stack(torch.meshgrid([coords_h, coords_w])) # 2, Wh, Ww

coords_flatten = torch.flatten(coords, 1) # 2, Wh*Ww

relative_coords = coords_flatten[:, :, None] - coords_flatten[:, None, :] # 2, Wh*Ww, Wh*Ww

relative_coords = relative_coords.permute(1, 2, 0).contiguous() # Wh*Ww, Wh*Ww, 2

relative_coords[:, :, 0] += self.window_size[0] - 1 # shift to start from 0

relative_coords[:, :, 1] += self.window_size[1] - 1

relative_coords[:, :, 0] *= 2 * self.window_size[1] - 1

relative_position_index = relative_coords.sum(-1) # Wh*Ww, Wh*Ww

self.register_buffer("relative_position_index", relative_position_index)

Let's add print statements to it and look at the values.

print()

# get pair-wise relative position index for each token inside the window

coords_h = torch.arange(self.window_size[0])

print(f'{coords_h=}')

coords_w = torch.arange(self.window_size[1])

print(f'{coords_w=}')

tmp_val=torch.meshgrid([coords_h, coords_w])

print(f'after meshgrid,{tmp_val=}')

coords = torch.stack(tmp_val) # 2, Wh, Ww

print(f'after stack, {coords=}')

coords_flatten = torch.flatten(coords, 1) # 2, Wh*Ww

print(f'{coords_flatten=}')

print(f'{coords_flatten.shape=}')

print(f'{coords_flatten[:, :, None]=}')

print(f'{coords_flatten[:, :, None].shape=}')

print(f'{coords_flatten[:, None, :]=}')

print(f'{coords_flatten[:, None, :].shape=}')

relative_coords = coords_flatten[:, :, None] - coords_flatten[:, None, :] # 2, Wh*Ww, Wh*Ww

print(f'{relative_coords.shape=}')

print(f'{relative_coords=}')

relative_coords = relative_coords.permute(1, 2, 0).contiguous() # Wh*Ww, Wh*Ww, 2

print(f'after permute, {relative_coords.shape=}')

print(f'after permute, {relative_coords=}')

print(f'{relative_coords[:, :, 0].shape=}')

print(f'{relative_coords[:, :, 0]=}')

print(f'{relative_coords[:, :, 1].shape=}')

print(f'{relative_coords[:, :, 1]=}')

# print(f'{relative_coords[:, :, 0]=}')

relative_coords[:, :, 0] += self.window_size[0] - 1 # shift to start from 0

print(f'after add window size,{relative_coords[:, :, 0]=}')

relative_coords[:, :, 1] += self.window_size[1] - 1

print(f'after add window size,{relative_coords[:, :, 1]=}')

relative_coords[:, :, 0] *= 2 * self.window_size[1] - 1

print(f'after multi window size,{relative_coords[:, :, 0]=}')

relative_position_index = relative_coords.sum(-1) # Wh*Ww, Wh*Ww

print(f'{relative_position_index.shape=}')

print(f'{relative_position_index=}')

self.register_buffer("relative_position_index", relative_position_index)

The output is:

coords_h=tensor([0, 1, 2, 3, 4, 5, 6])

coords_w=tensor([0, 1, 2, 3, 4, 5, 6])

after meshgrid,tmp_val=(tensor([[0, 0, 0, 0, 0, 0, 0],

[1, 1, 1, 1, 1, 1, 1],

[2, 2, 2, 2, 2, 2, 2],

[3, 3, 3, 3, 3, 3, 3],

[4, 4, 4, 4, 4, 4, 4],

[5, 5, 5, 5, 5, 5, 5],

[6, 6, 6, 6, 6, 6, 6]]), tensor([[0, 1, 2, 3, 4, 5, 6],

[0, 1, 2, 3, 4, 5, 6],

[0, 1, 2, 3, 4, 5, 6],

[0, 1, 2, 3, 4, 5, 6],

[0, 1, 2, 3, 4, 5, 6],

[0, 1, 2, 3, 4, 5, 6],

[0, 1, 2, 3, 4, 5, 6]]))

after stack, coords=tensor([[[0, 0, 0, 0, 0, 0, 0],

[1, 1, 1, 1, 1, 1, 1],

[2, 2, 2, 2, 2, 2, 2],

[3, 3, 3, 3, 3, 3, 3],

[4, 4, 4, 4, 4, 4, 4],

[5, 5, 5, 5, 5, 5, 5],

[6, 6, 6, 6, 6, 6, 6]],

[[0, 1, 2, 3, 4, 5, 6],

[0, 1, 2, 3, 4, 5, 6],

[0, 1, 2, 3, 4, 5, 6],

[0, 1, 2, 3, 4, 5, 6],

[0, 1, 2, 3, 4, 5, 6],

[0, 1, 2, 3, 4, 5, 6],

[0, 1, 2, 3, 4, 5, 6]]])

coords_flatten=tensor([[0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 2, 3, 3, 3,

3, 3, 3, 3, 4, 4, 4, 4, 4, 4, 4, 5, 5, 5, 5, 5, 5, 5, 6, 6, 6, 6, 6, 6,

6],

[0, 1, 2, 3, 4, 5, 6, 0, 1, 2, 3, 4, 5, 6, 0, 1, 2, 3, 4, 5, 6, 0, 1, 2,

3, 4, 5, 6, 0, 1, 2, 3, 4, 5, 6, 0, 1, 2, 3, 4, 5, 6, 0, 1, 2, 3, 4, 5,

6]])

coords_flatten.shape=torch.Size([2, 49])

coords_flatten[:, :, None]=tensor([[[0],

[0],

[0],

[0],

[0],

[0],

[0],

[1],

[1],

[1],

[1],

[1],

[1],

[1],

[2],

[2],

[2],

[2],

[2],

[2],

[2],

[3],

[3],

[3],

[3],

[3],

[3],

[3],

[4],

[4],

[4],

[4],

[4],

[4],

[4],

[5],

[5],

[5],

[5],

[5],

[5],

[5],

[6],

[6],

[6],

[6],

[6],

[6],

[6]],

[[0],

[1],

[2],

[3],

[4],

[5],

[6],

[0],

[1],

[2],

[3],

[4],

[5],

[6],

[0],

[1],

[2],

[3],

[4],

[5],

[6],

[0],

[1],

[2],

[3],

[4],

[5],

[6],

[0],

[1],

[2],

[3],

[4],

[5],

[6],

[0],

[1],

[2],

[3],

[4],

[5],

[6],

[0],

[1],

[2],

[3],

[4],

[5],

[6]]])

coords_flatten[:, :, None].shape=torch.Size([2, 49, 1])

coords_flatten[:, None, :]=tensor([[[0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 2, 3, 3,

3, 3, 3, 3, 3, 4, 4, 4, 4, 4, 4, 4, 5, 5, 5, 5, 5, 5, 5, 6, 6, 6, 6,

6, 6, 6]],

[[0, 1, 2, 3, 4, 5, 6, 0, 1, 2, 3, 4, 5, 6, 0, 1, 2, 3, 4, 5, 6, 0, 1,

2, 3, 4, 5, 6, 0, 1, 2, 3, 4, 5, 6, 0, 1, 2, 3, 4, 5, 6, 0, 1, 2, 3,

4, 5, 6]]])

coords_flatten[:, None, :].shape=torch.Size([2, 1, 49])

relative_coords.shape=torch.Size([2, 49, 49])

relative_coords=tensor([[[ 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2,

-2, -2, -2, -2, -3, -3, -3, -3, -3, -3, -3, -4, -4, -4, -4, -4, -4,

-4, -5, -5, -5, -5, -5, -5, -5, -6, -6, -6, -6, -6, -6, -6],

[ 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2,

-2, -2, -2, -2, -3, -3, -3, -3, -3, -3, -3, -4, -4, -4, -4, -4, -4,

-4, -5, -5, -5, -5, -5, -5, -5, -6, -6, -6, -6, -6, -6, -6],

[ 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2,

-2, -2, -2, -2, -3, -3, -3, -3, -3, -3, -3, -4, -4, -4, -4, -4, -4,

-4, -5, -5, -5, -5, -5, -5, -5, -6, -6, -6, -6, -6, -6, -6],

[ 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2,

-2, -2, -2, -2, -3, -3, -3, -3, -3, -3, -3, -4, -4, -4, -4, -4, -4,

-4, -5, -5, -5, -5, -5, -5, -5, -6, -6, -6, -6, -6, -6, -6],

[ 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2,

-2, -2, -2, -2, -3, -3, -3, -3, -3, -3, -3, -4, -4, -4, -4, -4, -4,

-4, -5, -5, -5, -5, -5, -5, -5, -6, -6, -6, -6, -6, -6, -6],

[ 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2,

-2, -2, -2, -2, -3, -3, -3, -3, -3, -3, -3, -4, -4, -4, -4, -4, -4,

-4, -5, -5, -5, -5, -5, -5, -5, -6, -6, -6, -6, -6, -6, -6],

[ 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2,

-2, -2, -2, -2, -3, -3, -3, -3, -3, -3, -3, -4, -4, -4, -4, -4, -4,

-4, -5, -5, -5, -5, -5, -5, -5, -6, -6, -6, -6, -6, -6, -6],

[ 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1,

-1, -1, -1, -1, -2, -2, -2, -2, -2, -2, -2, -3, -3, -3, -3, -3, -3,

-3, -4, -4, -4, -4, -4, -4, -4, -5, -5, -5, -5, -5, -5, -5],

[ 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1,

-1, -1, -1, -1, -2, -2, -2, -2, -2, -2, -2, -3, -3, -3, -3, -3, -3,

-3, -4, -4, -4, -4, -4, -4, -4, -5, -5, -5, -5, -5, -5, -5],

[ 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1,

-1, -1, -1, -1, -2, -2, -2, -2, -2, -2, -2, -3, -3, -3, -3, -3, -3,

-3, -4, -4, -4, -4, -4, -4, -4, -5, -5, -5, -5, -5, -5, -5],

[ 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1,

-1, -1, -1, -1, -2, -2, -2, -2, -2, -2, -2, -3, -3, -3, -3, -3, -3,

-3, -4, -4, -4, -4, -4, -4, -4, -5, -5, -5, -5, -5, -5, -5],

[ 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1,

-1, -1, -1, -1, -2, -2, -2, -2, -2, -2, -2, -3, -3, -3, -3, -3, -3,

-3, -4, -4, -4, -4, -4, -4, -4, -5, -5, -5, -5, -5, -5, -5],

[ 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1,

-1, -1, -1, -1, -2, -2, -2, -2, -2, -2, -2, -3, -3, -3, -3, -3, -3,

-3, -4, -4, -4, -4, -4, -4, -4, -5, -5, -5, -5, -5, -5, -5],

[ 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1,

-1, -1, -1, -1, -2, -2, -2, -2, -2, -2, -2, -3, -3, -3, -3, -3, -3,

-3, -4, -4, -4, -4, -4, -4, -4, -5, -5, -5, -5, -5, -5, -5],

[ 2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0,

0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2, -2, -2, -2,

-2, -3, -3, -3, -3, -3, -3, -3, -4, -4, -4, -4, -4, -4, -4],

[ 2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0,

0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2, -2, -2, -2,

-2, -3, -3, -3, -3, -3, -3, -3, -4, -4, -4, -4, -4, -4, -4],

[ 2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0,

0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2, -2, -2, -2,

-2, -3, -3, -3, -3, -3, -3, -3, -4, -4, -4, -4, -4, -4, -4],

[ 2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0,

0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2, -2, -2, -2,

-2, -3, -3, -3, -3, -3, -3, -3, -4, -4, -4, -4, -4, -4, -4],

[ 2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0,

0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2, -2, -2, -2,

-2, -3, -3, -3, -3, -3, -3, -3, -4, -4, -4, -4, -4, -4, -4],

[ 2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0,

0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2, -2, -2, -2,

-2, -3, -3, -3, -3, -3, -3, -3, -4, -4, -4, -4, -4, -4, -4],

[ 2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0,

0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2, -2, -2, -2,

-2, -3, -3, -3, -3, -3, -3, -3, -4, -4, -4, -4, -4, -4, -4],

[ 3, 3, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2, 1, 1, 1,

1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1,

-1, -2, -2, -2, -2, -2, -2, -2, -3, -3, -3, -3, -3, -3, -3],

[ 3, 3, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2, 1, 1, 1,

1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1,

-1, -2, -2, -2, -2, -2, -2, -2, -3, -3, -3, -3, -3, -3, -3],

[ 3, 3, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2, 1, 1, 1,

1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1,

-1, -2, -2, -2, -2, -2, -2, -2, -3, -3, -3, -3, -3, -3, -3],

[ 3, 3, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2, 1, 1, 1,

1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1,

-1, -2, -2, -2, -2, -2, -2, -2, -3, -3, -3, -3, -3, -3, -3],

[ 3, 3, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2, 1, 1, 1,

1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1,

-1, -2, -2, -2, -2, -2, -2, -2, -3, -3, -3, -3, -3, -3, -3],

[ 3, 3, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2, 1, 1, 1,

1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1,

-1, -2, -2, -2, -2, -2, -2, -2, -3, -3, -3, -3, -3, -3, -3],

[ 3, 3, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2, 1, 1, 1,

1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1,

-1, -2, -2, -2, -2, -2, -2, -2, -3, -3, -3, -3, -3, -3, -3],

[ 4, 4, 4, 4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 2, 2, 2,

2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0,

0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2, -2, -2, -2, -2],

[ 4, 4, 4, 4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 2, 2, 2,

2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0,

0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2, -2, -2, -2, -2],

[ 4, 4, 4, 4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 2, 2, 2,

2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0,

0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2, -2, -2, -2, -2],

[ 4, 4, 4, 4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 2, 2, 2,

2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0,

0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2, -2, -2, -2, -2],

[ 4, 4, 4, 4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 2, 2, 2,

2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0,

0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2, -2, -2, -2, -2],

[ 4, 4, 4, 4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 2, 2, 2,

2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0,

0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2, -2, -2, -2, -2],

[ 4, 4, 4, 4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 2, 2, 2,

2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0,

0, -1, -1, -1, -1, -1, -1, -1, -2, -2, -2, -2, -2, -2, -2],

[ 5, 5, 5, 5, 5, 5, 5, 4, 4, 4, 4, 4, 4, 4, 3, 3, 3,

3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1,

1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1],

[ 5, 5, 5, 5, 5, 5, 5, 4, 4, 4, 4, 4, 4, 4, 3, 3, 3,

3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1,

1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1],

[ 5, 5, 5, 5, 5, 5, 5, 4, 4, 4, 4, 4, 4, 4, 3, 3, 3,

3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1,

1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1],

[ 5, 5, 5, 5, 5, 5, 5, 4, 4, 4, 4, 4, 4, 4, 3, 3, 3,

3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1,

1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1],

[ 5, 5, 5, 5, 5, 5, 5, 4, 4, 4, 4, 4, 4, 4, 3, 3, 3,

3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1,

1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1],

[ 5, 5, 5, 5, 5, 5, 5, 4, 4, 4, 4, 4, 4, 4, 3, 3, 3,

3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1,

1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1],

[ 5, 5, 5, 5, 5, 5, 5, 4, 4, 4, 4, 4, 4, 4, 3, 3, 3,

3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1,

1, 0, 0, 0, 0, 0, 0, 0, -1, -1, -1, -1, -1, -1, -1],

[ 6, 6, 6, 6, 6, 6, 6, 5, 5, 5, 5, 5, 5, 5, 4, 4, 4,

4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2,

2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0],

[ 6, 6, 6, 6, 6, 6, 6, 5, 5, 5, 5, 5, 5, 5, 4, 4, 4,

4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2,

2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0],

[ 6, 6, 6, 6, 6, 6, 6, 5, 5, 5, 5, 5, 5, 5, 4, 4, 4,

4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2,

2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0],

[ 6, 6, 6, 6, 6, 6, 6, 5, 5, 5, 5, 5, 5, 5, 4, 4, 4,

4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2,

2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0],

[ 6, 6, 6, 6, 6, 6, 6, 5, 5, 5, 5, 5, 5, 5, 4, 4, 4,

4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2,

2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0],

[ 6, 6, 6, 6, 6, 6, 6, 5, 5, 5, 5, 5, 5, 5, 4, 4, 4,

4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2,

2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0],

[ 6, 6, 6, 6, 6, 6, 6, 5, 5, 5, 5, 5, 5, 5, 4, 4, 4,

4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2,

2, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0]],

[[ 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2,

-3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5,

-6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6],

[ 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1,

-2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4,

-5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5],

[ 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0,

-1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3,

-4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4],

[ 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1,

0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2,

-3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3],

[ 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2,

1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1,

-2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2],

[ 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3,

2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0,

-1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1],

[ 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4,

3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1,

0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0],

[ 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2,

-3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5,

-6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6],

[ 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1,

-2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4,

-5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5],

[ 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0,

-1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3,

-4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4],

[ 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1,

0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2,

-3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3],

[ 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2,

1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1,

-2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2],

[ 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3,

2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0,

-1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1],

[ 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4,

3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1,

0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0],

[ 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2,

-3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5,

-6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6],

[ 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1,

-2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4,

-5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5],

[ 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0,

-1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3,

-4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4],

[ 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1,

0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2,

-3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3],

[ 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2,

1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1,

-2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2],

[ 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3,

2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0,

-1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1],

[ 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4,

3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1,

0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0],

[ 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2,

-3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5,

-6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6],

[ 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1,

-2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4,

-5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5],

[ 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0,

-1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3,

-4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4],

[ 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1,

0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2,

-3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3],

[ 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2,

1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1,

-2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2],

[ 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3,

2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0,

-1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1],

[ 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4,

3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1,

0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0],

[ 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2,

-3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5,

-6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6],

[ 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1,

-2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4,

-5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5],

[ 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0,

-1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3,

-4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4],

[ 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1,

0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2,

-3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3],

[ 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2,

1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1,

-2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2],

[ 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3,

2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0,

-1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1],

[ 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4,

3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1,

0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0],

[ 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2,

-3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5,

-6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6],

[ 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1,

-2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4,

-5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5],

[ 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0,

-1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3,

-4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4],

[ 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1,

0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2,

-3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3],

[ 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2,

1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1,

-2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2],

[ 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3,

2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0,

-1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1],

[ 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4,

3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1,

0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0],

[ 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2,

-3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5,

-6, 0, -1, -2, -3, -4, -5, -6, 0, -1, -2, -3, -4, -5, -6],

[ 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1,

-2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4,

-5, 1, 0, -1, -2, -3, -4, -5, 1, 0, -1, -2, -3, -4, -5],

[ 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0,

-1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3,

-4, 2, 1, 0, -1, -2, -3, -4, 2, 1, 0, -1, -2, -3, -4],

[ 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1,

0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2,

-3, 3, 2, 1, 0, -1, -2, -3, 3, 2, 1, 0, -1, -2, -3],

[ 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2,

1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1,

-2, 4, 3, 2, 1, 0, -1, -2, 4, 3, 2, 1, 0, -1, -2],

[ 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3,

2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0,

-1, 5, 4, 3, 2, 1, 0, -1, 5, 4, 3, 2, 1, 0, -1],

[ 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4,

3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1,

0, 6, 5, 4, 3, 2, 1, 0, 6, 5, 4, 3, 2, 1, 0]]])

after permute, relative_coords.shape=torch.Size([49, 49, 2])

after permute, relative_coords=tensor([[[ 0, 0],

[ 0, -1],

[ 0, -2],

[ 0, -3],

[ 0, -4],

[ 0, -5],

[ 0, -6],

[-1, 0],

[-1, -1],

[-1, -2],

[-1, -3],

[-1, -4],

[-1, -5],

[-1, -6],

[-2, 0],

[-2, -1],

[-2, -2],

[-2, -3],

[-2, -4],

[-2, -5],

[-2, -6],

[-3, 0],

[-3, -1],

[-3, -2],

[-3, -3],

[-3, -4],

[-3, -5],

[-3, -6],

[-4, 0],

[-4, -1],

[-4, -2],

[-4, -3],

[-4, -4],

[-4, -5],

[-4, -6],

[-5, 0],

[-5, -1],

[-5, -2],

[-5, -3],

[-5, -4],

[-5, -5],

[-5, -6],

[-6, 0],

[-6, -1],

[-6, -2],

[-6, -3],

[-6, -4],

[-6, -5],

[-6, -6]],

[[ 0, 1],

[ 0, 0],

[ 0, -1],

[ 0, -2],

[ 0, -3],

[ 0, -4],

[ 0, -5],

[-1, 1],

[-1, 0],

[-1, -1],

[-1, -2],

[-1, -3],

[-1, -4],

[-1, -5],

[-2, 1],

[-2, 0],

[-2, -1],

[-2, -2],

[-2, -3],

[-2, -4],

[-2, -5],

[-3, 1],

[-3, 0],

[-3, -1],

[-3, -2],

[-3, -3],

[-3, -4],

[-3, -5],

[-4, 1],

[-4, 0],

[-4, -1],

[-4, -2],

[-4, -3],

[-4, -4],

[-4, -5],

[-5, 1],

[-5, 0],

[-5, -1],

[-5, -2],

[-5, -3],

[-5, -4],

[-5, -5],

[-6, 1],

[-6, 0],

[-6, -1],

[-6, -2],

[-6, -3],

[-6, -4],

[-6, -5]],

[[ 0, 2],

[ 0, 1],

[ 0, 0],

[ 0, -1],

[ 0, -2],

[ 0, -3],

[ 0, -4],

[-1, 2],

[-1, 1],

[-1, 0],

[-1, -1],

[-1, -2],

[-1, -3],

[-1, -4],

[-2, 2],

[-2, 1],

[-2, 0],

[-2, -1],

[-2, -2],

[-2, -3],

[-2, -4],

[-3, 2],

[-3, 1],

[-3, 0],

[-3, -1],

[-3, -2],

[-3, -3],

[-3, -4],

[-4, 2],

[-4, 1],

[-4, 0],

[-4, -1],

[-4, -2],

[-4, -3],

[-4, -4],

[-5, 2],

[-5, 1],

[-5, 0],

[-5, -1],

[-5, -2],

[-5, -3],

[-5, -4],

[-6, 2],

[-6, 1],

[-6, 0],

[-6, -1],

[-6, -2],

[-6, -3],

[-6, -4]],

[[ 0, 3],

[ 0, 2],

[ 0, 1],

[ 0, 0],

[ 0, -1],

[ 0, -2],

[ 0, -3],

[-1, 3],

[-1, 2],

[-1, 1],

[-1, 0],

[-1, -1],

[-1, -2],

[-1, -3],

[-2, 3],

[-2, 2],

[-2, 1],

[-2, 0],

[-2, -1],

[-2, -2],

[-2, -3],

[-3, 3],

[-3, 2],

[-3, 1],

[-3, 0],

[-3, -1],

[-3, -2],

[-3, -3],

[-4, 3],

[-4, 2],

[-4, 1],

[-4, 0],

[-4, -1],

[-4, -2],

[-4, -3],

[-5, 3],

[-5, 2],

[-5, 1],

[-5, 0],

[-5, -1],

[-5, -2],

[-5, -3],

[-6, 3],

[-6, 2],

[-6, 1],

[-6, 0],

[-6, -1],

[-6, -2],

[-6, -3]],

[[ 0, 4],

[ 0, 3],

[ 0, 2],

[ 0, 1],

[ 0, 0],

[ 0, -1],

[ 0, -2],

[-1, 4],

[-1, 3],

[-1, 2],

[-1, 1],

[-1, 0],

[-1, -1],

[-1, -2],

[-2, 4],

[-2, 3],

[-2, 2],

[-2, 1],

[-2, 0],

[-2, -1],

[-2, -2],

[-3, 4],

[-3, 3],

[-3, 2],

[-3, 1],

[-3, 0],

[-3, -1],

[-3, -2],

[-4, 4],

[-4, 3],

[-4, 2],

[-4, 1],

[-4, 0],

[-4, -1],

[-4, -2],

[-5, 4],

[-5, 3],

[-5, 2],

[-5, 1],

[-5, 0],

[-5, -1],

[-5, -2],

[-6, 4],

[-6, 3],

[-6, 2],

[-6, 1],

[-6, 0],

[-6, -1],

[-6, -2]],

[[ 0, 5],

[ 0, 4],

[ 0, 3],

[ 0, 2],

[ 0, 1],

[ 0, 0],

[ 0, -1],

[-1, 5],

[-1, 4],

[-1, 3],

[-1, 2],

[-1, 1],

[-1, 0],

[-1, -1],

[-2, 5],

[-2, 4],

[-2, 3],

[-2, 2],

[-2, 1],

[-2, 0],

[-2, -1],

[-3, 5],

[-3, 4],

[-3, 3],

[-3, 2],

[-3, 1],

[-3, 0],

[-3, -1],

[-4, 5],

[-4, 4],

[-4, 3],

[-4, 2],

[-4, 1],

[-4, 0],

[-4, -1],

[-5, 5],

[-5, 4],

[-5, 3],

[-5, 2],

[-5, 1],

[-5, 0],

[-5, -1],

[-6, 5],

[-6, 4],

[-6, 3],

[-6, 2],

[-6, 1],

[-6, 0],

[-6, -1]],

[[ 0, 6],

[ 0, 5],

[ 0, 4],

[ 0, 3],

[ 0, 2],

[ 0, 1],

[ 0, 0],

[-1, 6],

[-1, 5],

[-1, 4],

[-1, 3],

[-1, 2],

[-1, 1],

[-1, 0],

[-2, 6],

[-2, 5],

[-2, 4],

[-2, 3],

[-2, 2],

[-2, 1],

[-2, 0],

[-3, 6],

[-3, 5],

[-3, 4],

[-3, 3],

[-3, 2],

[-3, 1],

[-3, 0],

[-4, 6],

[-4, 5],

[-4, 4],

[-4, 3],

[-4, 2],

[-4, 1],

[-4, 0],

[-5, 6],

[-5, 5],

[-5, 4],

[-5, 3],

[-5, 2],

[-5, 1],

[-5, 0],

[-6, 6],

[-6, 5],

[-6, 4],

[-6, 3],

[-6, 2],

[-6, 1],

[-6, 0]],

[[ 1, 0],

[ 1, -1],

[ 1, -2],

[ 1, -3],

[ 1, -4],

[ 1, -5],

[ 1, -6],

[ 0, 0],

[ 0, -1],

[ 0, -2],

[ 0, -3],

[ 0, -4],

[ 0, -5],

[ 0, -6],

[-1, 0],

[-1, -1],

[-1, -2],

[-1, -3],

[-1, -4],

[-1, -5],

[-1, -6],

[-2, 0],

[-2, -1],

[-2, -2],

[-2, -3],

[-2, -4],

[-2, -5],

[-2, -6],

[-3, 0],

[-3, -1],

[-3, -2],

[-3, -3],

[-3, -4],

[-3, -5],

[-3, -6],

[-4, 0],

[-4, -1],

[-4, -2],

[-4, -3],

[-4, -4],

[-4, -5],

[-4, -6],

[-5, 0],

[-5, -1],

[-5, -2],

[-5, -3],

[-5, -4],

[-5, -5],

[-5, -6]],

[[ 1, 1],

[ 1, 0],

[ 1, -1],

[ 1, -2],

[ 1, -3],

[ 1, -4],

[ 1, -5],

[ 0, 1],

[ 0, 0],

[ 0, -1],

[ 0, -2],

[ 0, -3],

[ 0, -4],

[ 0, -5],

[-1, 1],

[-1, 0],

[-1, -1],

[-1, -2],

[-1, -3],

[-1, -4],

[-1, -5],

[-2, 1],

[-2, 0],

[-2, -1],

[-2, -2],

[-2, -3],

[-2, -4],

[-2, -5],

[-3, 1],

[-3, 0],

[-3, -1],

[-3, -2],

[-3, -3],

[-3, -4],

[-3, -5],

[-4, 1],

[-4, 0],

[-4, -1],

[-4, -2],

[-4, -3],

[-4, -4],

[-4, -5],

[-5, 1],

[-5, 0],

[-5, -1],

[-5, -2],

[-5, -3],

[-5, -4],

[-5, -5]],

[[ 1, 2],

[ 1, 1],

[ 1, 0],

[ 1, -1],

[ 1, -2],

[ 1, -3],

[ 1, -4],

[ 0, 2],

[ 0, 1],

[ 0, 0],

[ 0, -1],

[ 0, -2],

[ 0, -3],

[ 0, -4],

[-1, 2],

[-1, 1],

[-1, 0],

[-1, -1],

[-1, -2],

[-1, -3],

[-1, -4],

[-2, 2],

[-2, 1],

[-2, 0],

[-2, -1],

[-2, -2],

[-2, -3],

[-2, -4],

[-3, 2],

[-3, 1],

[-3, 0],

[-3, -1],

[-3, -2],

[-3, -3],

[-3, -4],

[-4, 2],

[-4, 1],

[-4, 0],

[-4, -1],

[-4, -2],

[-4, -3],

[-4, -4],

[-5, 2],

[-5, 1],

[-5, 0],

[-5, -1],

[-5, -2],

[-5, -3],